Day 4 Wrap Up from the NEON Data Institute 2017

Day 4: http://neondataskills.org/data-institute-17/day4/

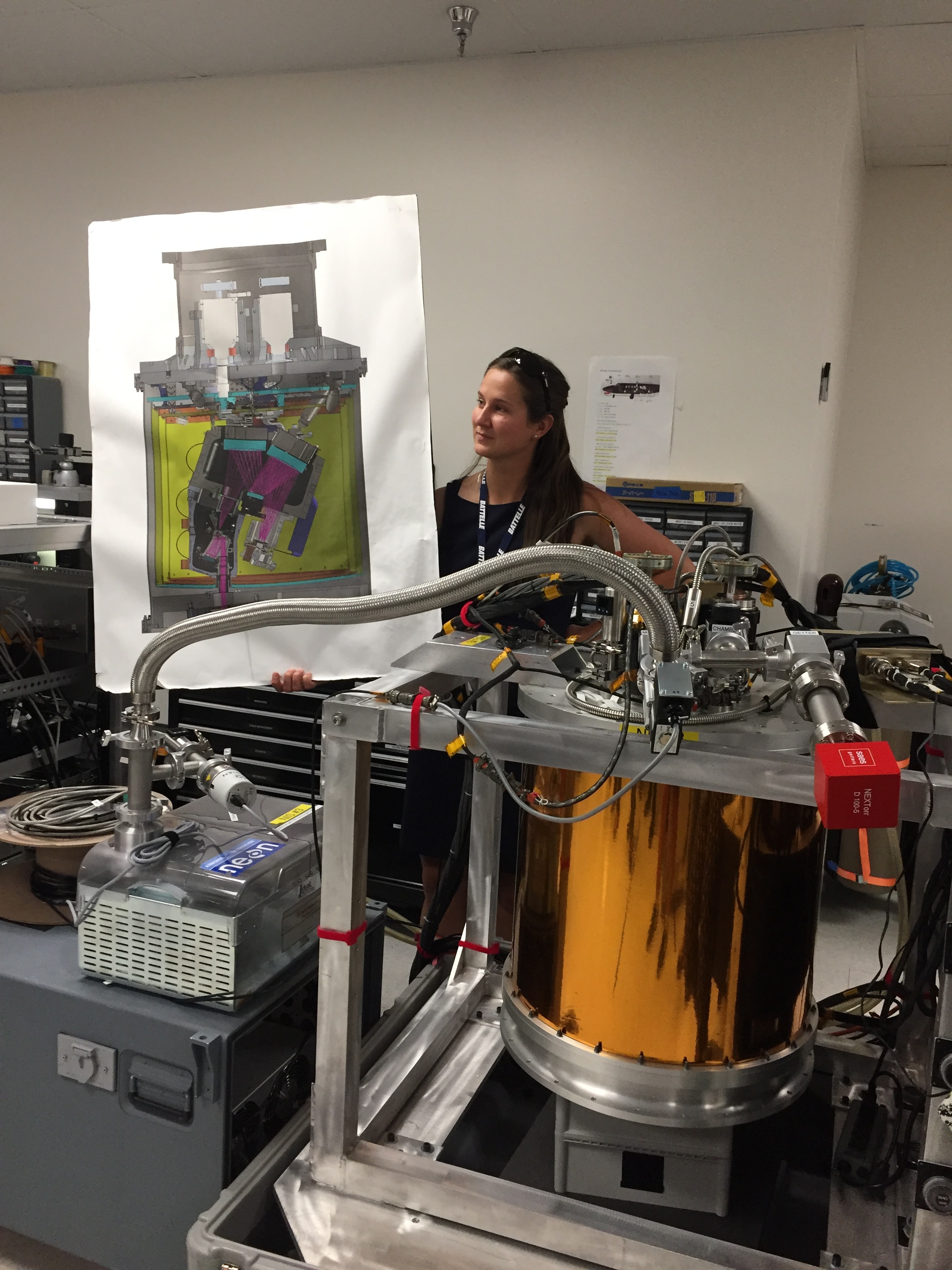

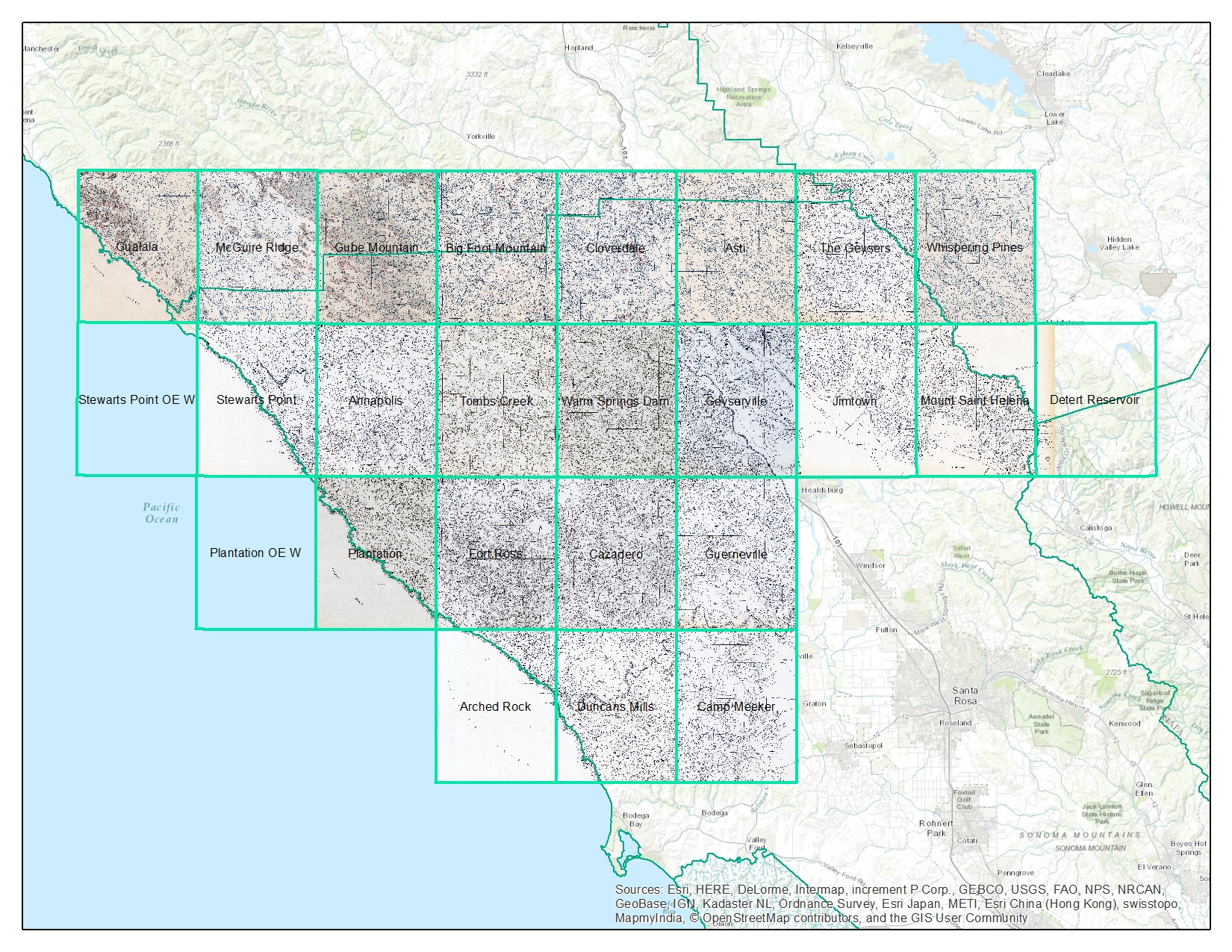

This is it! Final day of LUV-DATA. Today we focused on hyperspectral data and vegetation. Paul Gader from the University of Florida kicked off the day with a survey of some of his projects in hyperspectral data, explorations in NEON data, and big data algorithmic challenges.

Katie Jones talked about the terrestrial observational plot protocol at the NEON sites. Plots are either tower plots (within the tower airshed) or distributed plots (spread throughout the site). She focused on the vegetation sampling protocols (individual, diversity, phenology, biomass, productivity, biogeochemistry). These data are due to be released in the fall.

Samantha Weintraub talked to us about foliar chemistry data (e.g. C, N, lignin, chlorophyll, trace elements) and linking them with remote sensing. We are still learning about the fundamental controls on canopy traits within and between ecosystems, and we have a poor understanding of how those traits respond to global change, so this kind of NEON work is very important. All of these foliar chemistry data will be released in the fall. She also mentioned the extensive soil biogeochemical and microbial measurements in soil plots (30 cm depth), again in both tower and distributed plots, taken during peak greenness and two seasonal transitions.

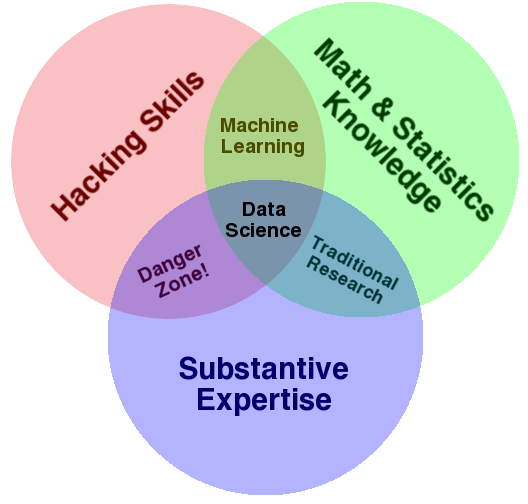

The coding work focused on classifying spectra. We worked through three lessons (Classification of Hyperspectral Data with Ordinary Least Squares in Python; Classification of Hyperspectral Data with Principal Components Analysis in Python; and Using SciKit for Support Vector Machine (SVM) Classification with Python) in our new best friend, Jupyter Notebooks. We spent most of the time talking about statistical learning, machine learning, and the hazards of using these methods without understanding the target system.
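For anyone who wants a feel for the first two ideas without the workshop notebooks, here is a minimal sketch of my own: PCA to compress spectra, followed by an ordinary least squares classifier fit to one-hot class labels. It is not the NEON lesson code. The spectra are synthetic, the two classes are made up, and every function name (`make_synthetic_spectra`, `pca_fit`, `ols_fit`, and so on) is hypothetical. It uses only NumPy, so the scikit-learn SVM step is not shown.

```python
import numpy as np


def make_synthetic_spectra(n_per_class=100, n_bands=50, seed=0):
    """Generate toy 'spectra' for two hypothetical classes (not NEON data)."""
    rng = np.random.default_rng(seed)
    bands = np.linspace(0.0, 1.0, n_bands)
    # Two made-up mean spectra: class 1 has an extra bump near band position 0.7.
    mean_a = 0.3 + 0.1 * bands
    mean_b = mean_a + 0.2 * np.exp(-((bands - 0.7) ** 2) / 0.01)
    X = np.vstack([
        mean_a + 0.05 * rng.standard_normal((n_per_class, n_bands)),
        mean_b + 0.05 * rng.standard_normal((n_per_class, n_bands)),
    ])
    y = np.repeat([0, 1], n_per_class)
    return X, y


def pca_fit(X, n_components):
    """Return the per-band mean and the top principal axes, computed via SVD."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_transform(X, mean, components):
    """Project spectra onto the principal axes."""
    return (X - mean) @ components.T


def ols_fit(Z, y, n_classes):
    """Least-squares fit of one-hot class indicators on features plus an intercept."""
    A = np.hstack([np.ones((Z.shape[0], 1)), Z])
    Y = np.eye(n_classes)[y]
    coef, *_ = np.linalg.lstsq(A, Y, rcond=None)
    return coef


def ols_predict(Z, coef):
    """Assign each sample to the class with the largest fitted indicator value."""
    A = np.hstack([np.ones((Z.shape[0], 1)), Z])
    return np.argmax(A @ coef, axis=1)


X, y = make_synthetic_spectra()
# Hold out every other spectrum so the classifier is scored on unseen samples.
train = np.arange(len(y)) % 2 == 0
mean, comps = pca_fit(X[train], n_components=3)
coef = ols_fit(pca_transform(X[train], mean, comps), y[train], n_classes=2)
pred = ols_predict(pca_transform(X[~train], mean, comps), coef)
accuracy = float(np.mean(pred == y[~train]))
print(f"held-out accuracy: {accuracy:.2f}")
```

The toy classes are separated on purpose, so a high score here says nothing about real canopies. That is the hazard from the discussion in miniature: the numbers only mean something if you understand the system behind the spectra.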

Fun additional take-home messages/resources:

- NEON data seems like a tremendous resource for research and teaching. Increasing amounts of data are going to be added to their data portal. Stay tuned: http://data.neonscience.org/home

- NRC has collaborated with NEON to do some spatially extensive soil characterization across the sites. These data will also be available as a NEON product.

- For more on when data rolls out, sign up for the NEON eNews here: http://www.neonscience.org/

Thanks to everyone today! Megan Jones (ran a flawless workshop), Paul Gader (remote sensing use cases/classification), Katie Jones (NEON terrestrial vegetation sampling), Samantha Weintraub (foliar chemistry data).

And thanks to NEON for putting on this excellent workshop. I learned a ton, met great people, got re-energized about reproducible workflows (have some ideas about incorporating these concepts into everyday work), and got to spend some nostalgic time walking around my former haunts in Boulder.