SNAMP spatial newsletter on lidar

This is an excerpt from an older SNAMP newsletter that Marek and I wrote describing the use of lidar in our Sierra Nevada Adaptive Management Project. Originally published November 2008.

Environmental sciences are inherently spatial, and geospatial tools such as Geographical Information Systems (GIS), Global Positioning Systems (GPS) and remote sensing are fundamental to these research enterprises. Remote sensing has been used for forest and habitat mapping for a long time, and new technological developments such as LIDAR (light detection and ranging) are making this field even more exciting. Here we briefly describe LIDAR’s basic principles and show some preliminary analyses completed for the SNAMP Project. We are using this data to model detailed topography to help the water team understand runoff in the SNAMP watersheds, to map forest canopy cover and vegetation height as inputs to the fire and forest health team’s detailed fire models, and to derive important forest habitat characteristics for the spotted owl and fisher teams.

We contracted with the National Center for Airborne LIDAR Mapping (NCALM) for our data. They flew the GEMINI instrument at approximately 600 m above ground level, with 67% swath overlap. The instrument collected 4 discrete returns per pulse at 125 kHz, and the data has a final density of 9 points per m².

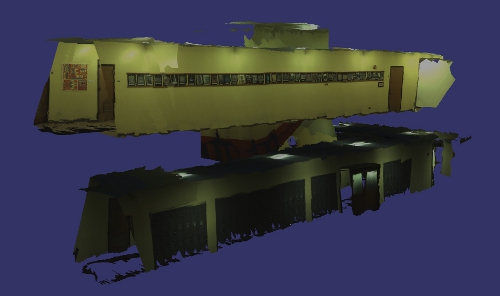

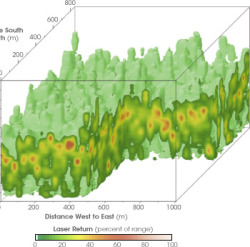

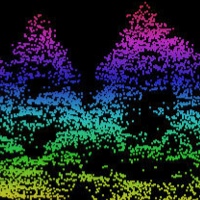

Raw Data: LIDAR data is typically delivered as a “point cloud,” a collection of elevations and their intensities that can be projected in a three-dimensional space. In Figure 2 (right) we show this “point cloud” concept. There are thousands of individual points in the image, each colored according to its height (magenta and red are high, orange and yellow are low).
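To make the "point cloud" idea concrete, here is a minimal sketch of how such points might be held in memory and colored by height. The `LidarPoint` record, the sample coordinates, and the equal-width binning are all assumptions for illustration; they are not the format NCALM delivered or the rendering used for the figure.

```python
from collections import namedtuple

# Hypothetical record for one LIDAR return: position, elevation, intensity.
LidarPoint = namedtuple("LidarPoint", "x y z intensity")

# Color ramp from low to high, following the figure description.
RAMP = ["yellow", "orange", "red", "magenta"]

def color_by_height(points):
    """Assign each point a color class using equal-width elevation bins."""
    zs = [p.z for p in points]
    z_min, z_max = min(zs), max(zs)
    span = (z_max - z_min) or 1.0  # avoid dividing by zero on flat data
    colors = []
    for p in points:
        frac = (p.z - z_min) / span
        idx = min(int(frac * len(RAMP)), len(RAMP) - 1)
        colors.append(RAMP[idx])
    return colors

# Made-up sample points, not SNAMP data.
cloud = [
    LidarPoint(0.0, 0.0, 1500.0, 12),
    LidarPoint(0.4, 0.1, 1510.0, 30),
    LidarPoint(0.2, 0.5, 1525.0, 22),
    LidarPoint(0.9, 0.3, 1540.0, 41),
]
print(color_by_height(cloud))
```

A real point cloud holds millions of such records, so in practice they are stored in array form rather than as individual objects.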

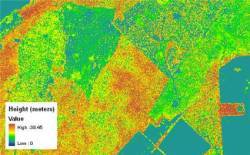

Bare Earth: Once the data is collected, the first step is to transform it into a “bare earth” model, which is an approximation of the ground surface with all objects above it removed. We use the “Last Return” data (see Figure 1 above) to generate this model of the bare earth. These are typically very detailed products (with a small footprint on the ground) and provide much more topographic information than Digital Elevation Models (DEMs) derived from topographic maps. Our DEM has a ground resolution of under 1 m.
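As a rough illustration of the last-return idea, the sketch below keeps only the final return of each pulse and records the lowest elevation in each grid cell. This is a deliberately simplified assumption, not the processing chain we or NCALM used: last returns do not always reach the ground under dense canopy, and production workflows apply dedicated ground-classification filters and interpolation. The function name, the tuple layout, and the sample values are hypothetical.

```python
import math

def bare_earth_grid(points, cell_size=1.0):
    """Return {(col, row): lowest last-return elevation} per occupied cell.

    points: iterable of (x, y, z, return_number, number_of_returns).
    """
    grid = {}
    for x, y, z, ret_num, num_returns in points:
        if ret_num != num_returns:
            continue  # keep only the last return of each pulse
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in grid or z < grid[cell]:
            grid[cell] = z
    return grid

# Made-up sample returns, not SNAMP data.
points = [
    (0.2, 0.3, 1512.4, 1, 2),  # first of two returns: canopy hit
    (0.2, 0.3, 1500.1, 2, 2),  # last of two returns: likely ground
    (0.7, 0.6, 1500.4, 1, 1),  # single return: open ground
    (1.5, 0.2, 1501.0, 3, 3),  # last of three returns
]
dem = bare_earth_grid(points)
print(dem)
```

Taking the minimum per cell is a crude stand-in for ground filtering, but it shows why a high point density matters: more points per cell means a better chance that at least one pulse reached the ground.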

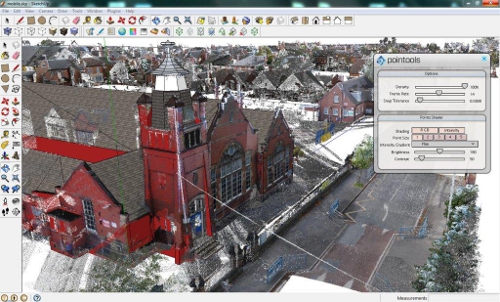

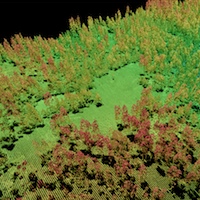

Forest Structure: Another typical step in processing LIDAR data is to examine individual trees and forest structure. An example of a forest stand is shown in Figure 4. These and other products help us understand how the forest influences surface hydrology, how a patch of forest might provide habitat for a fisher and how a forest might burn given certain weather and wind patterns.
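Two of the simplest structure products are vegetation height and canopy cover. The sketch below derives both from first returns and a gridded ground surface: height is the first-return elevation minus the ground elevation in the same cell, and cover is the share of first returns above a height cutoff. The 2 m cutoff, the function name, and the sample values are assumptions for illustration, not the definitions used by the SNAMP teams.

```python
import math

def canopy_metrics(first_returns, ground, cell_size=1.0, cover_threshold=2.0):
    """Compute a simple canopy height model and canopy cover fraction.

    first_returns: iterable of (x, y, z) for the first return of each pulse.
    ground: {(col, row): ground elevation}, e.g. from a bare earth model.
    Returns (chm, cover): chm maps each cell to its tallest height above
    ground; cover is the fraction of first returns above cover_threshold.
    """
    chm = {}
    above = 0
    counted = 0
    for x, y, z in first_returns:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in ground:
            continue  # no ground estimate here, so height is unknown
        height = z - ground[cell]
        chm[cell] = max(chm.get(cell, 0.0), height)
        counted += 1
        if height > cover_threshold:
            above += 1
    cover = above / counted if counted else 0.0
    return chm, cover

# Made-up sample values, not SNAMP data.
ground = {(0, 0): 1500.0, (1, 0): 1501.0}
first_returns = [
    (0.2, 0.3, 1525.0),
    (0.8, 0.1, 1518.0),
    (1.4, 0.6, 1501.5),
    (1.6, 0.2, 1531.0),
]
chm, cover = canopy_metrics(first_returns, ground)
print(chm, cover)
```

Identifying individual trees takes more work than this, typically by finding local maxima in the canopy height surface, but the per-cell height and cover values are already useful inputs for fire and habitat models.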

Future Analyses: We are in the process of linking the forest parameters gathered by the Fire & Forest Ecosystem Health Team in summer 2008 with the LIDAR-derived data to help scale up forest variables to the fireshed scale. For example, tree height, tree DBH (diameter at breast height) and canopy cover have been successfully modeled using LIDAR data in other studies, and there is active research linking field-based and LIDAR-based fire-related measures such as canopy base height and ladder fuels, and wildlife-related measures such as vertical structure.
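One common way to link plot measurements with LIDAR metrics is a regression fitted on the field plots and then applied wherever LIDAR data exist. The sketch below fits an ordinary least-squares line between a LIDAR height metric and field-measured tree height. The numbers are invented purely to show the mechanics, and a straight-line model is an assumption; it is not a result or a method from the SNAMP analysis.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Invented plot-level values (meters), for illustration only.
lidar_height = [10.0, 20.0, 30.0, 40.0]  # LIDAR-derived height per plot
field_height = [10.0, 19.0, 28.0, 37.0]  # field-measured height per plot

slope, intercept = fit_line(lidar_height, field_height)

def predict(lidar_value):
    """Estimate field height for a location that has LIDAR but no plot."""
    return slope * lidar_value + intercept

print(slope, intercept, predict(25.0))
```

Once fitted on the plots, the same relationship can be applied to every grid cell in the fireshed, which is what scaling up means here.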