SPUR 2021 update: Mapping changes to police spending in California

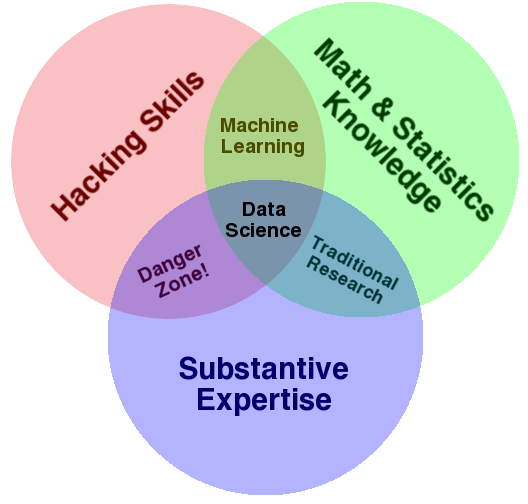

The Fall 2020 Sponsored Project for Undergraduate Research (SPUR) project “Mapping municipal funding for police in California,” supported by UC Berkeley’s Rausser College of Natural Resources, continued in Spring 2021 with the Kellylab. This semester we continued our work with Mapping Black California (MBC), the Southern California-based collective that incorporates technology, data, geography, and place-based study to better understand and connect African American communities in California. Ben Satzman, who led the project in the Fall, was joined by Rezahn Abraha. Together they found additional datasets that helped us understand the changes in police funding from 2014 to 2019 in California, and they explored the variability of police spending across the state. Read more below, and here is the Spring 2021 Story Map: How Do California Cities Spend Money on Policing? Mapping the variability of police spending from 2014-2019 in 476 California Cities.

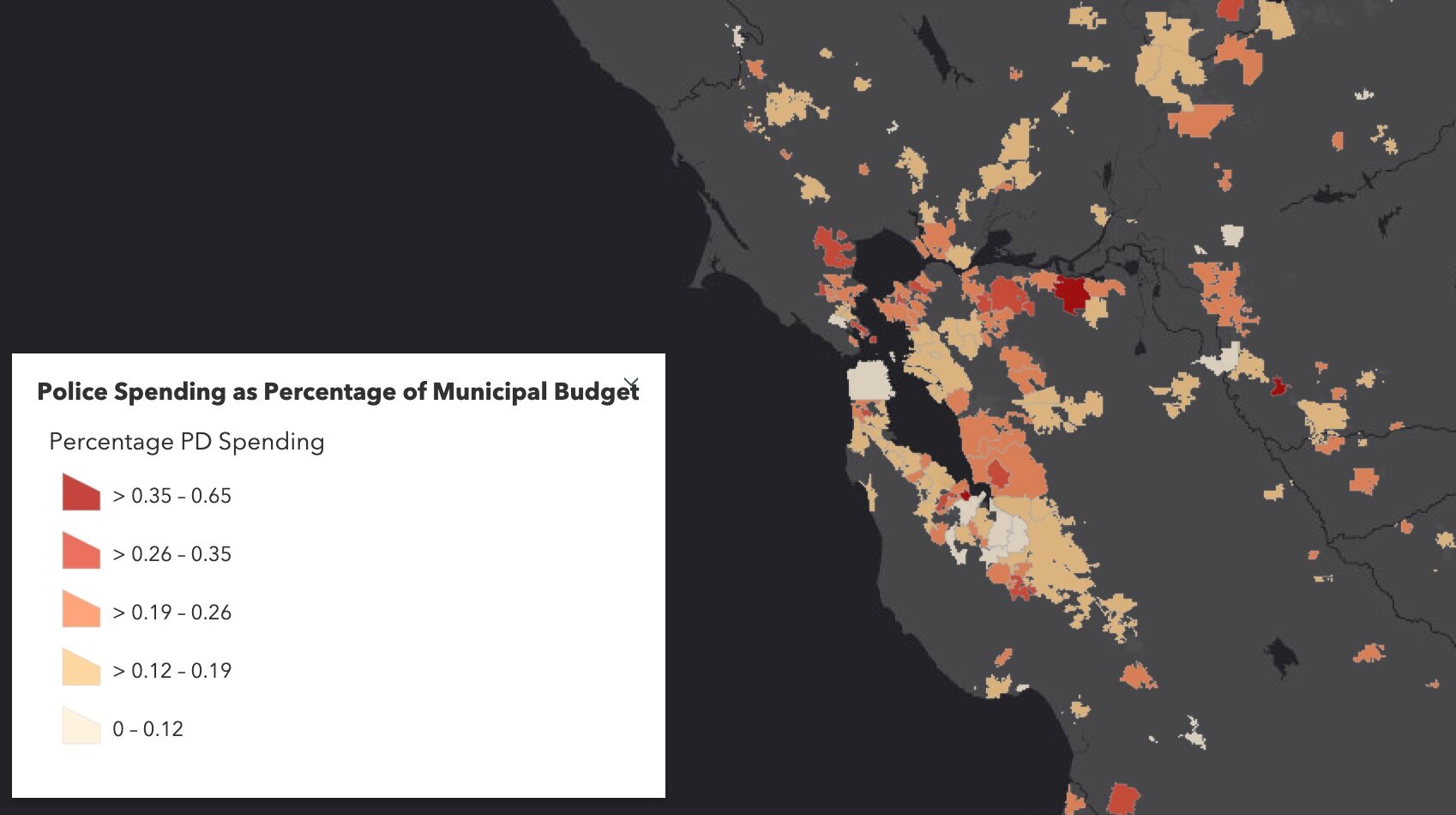

This semester we again met weekly and used data from 476 cities across California detailing municipal police funding in 2014 and 2019. By way of background, California has nearly 500 incorporated cities. Most municipalities have their own police departments and create an annual budget that determines what percentage of city spending the police department will receive. The variability in police spending across the state is quite surprising, and this is what we dug into in Fall 2020. In 2019 the average percentage of municipal budgets spent on policing was about 20%; while some municipalities spent less than 5% of their budgets on policing, others allocated more than half of their budgets to their police departments. Per capita police spending averaged about $500, but varied widely, from about $10 to well over $2,000. Check out the Fall 2020 Story Map.
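To make the two measures above concrete, here is a minimal Python sketch of how the share of a municipal budget spent on policing and the per capita police spending can be computed. The `CityBudget` class, the function names, and the two example cities with their dollar figures are hypothetical and chosen only for illustration; they are not values from our dataset.

```python
from dataclasses import dataclass


@dataclass
class CityBudget:
    """One city's figures for a single fiscal year (hypothetical structure)."""
    name: str
    total_expenditures: float   # total city spending, in dollars
    police_expenditures: float  # police department spending, in dollars
    population: int


def police_share_pct(city: CityBudget) -> float:
    """Percentage of the municipal budget spent on policing."""
    return 100.0 * city.police_expenditures / city.total_expenditures


def police_per_capita(city: CityBudget) -> float:
    """Police spending per resident, in dollars."""
    return city.police_expenditures / city.population


# Made-up example cities, NOT real data.
cities = [
    CityBudget("Example City A", 100_000_000, 20_000_000, 40_000),
    CityBudget("Example City B", 50_000_000, 2_000_000, 100_000),
]

for city in cities:
    print(f"{city.name}: {police_share_pct(city):.1f}% of budget, "
          f"${police_per_capita(city):,.0f} per capita")
```

Looking at both measures together matters because a city can devote a modest share of its budget to policing and still spend a lot per resident, or the reverse.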

This semester, we set out to see how police department spending changed from 2014 to 2019, especially in relation to population changes over that same 5-year interval. We used the California State Controller's Finance Data to find each city's total expenditures and police department expenditures for 2014 and 2019. This dataset also had information about each city's total population for those years. We also used a feature class provided by CalTrans that had city boundary GIS data for all incorporated municipalities in California.

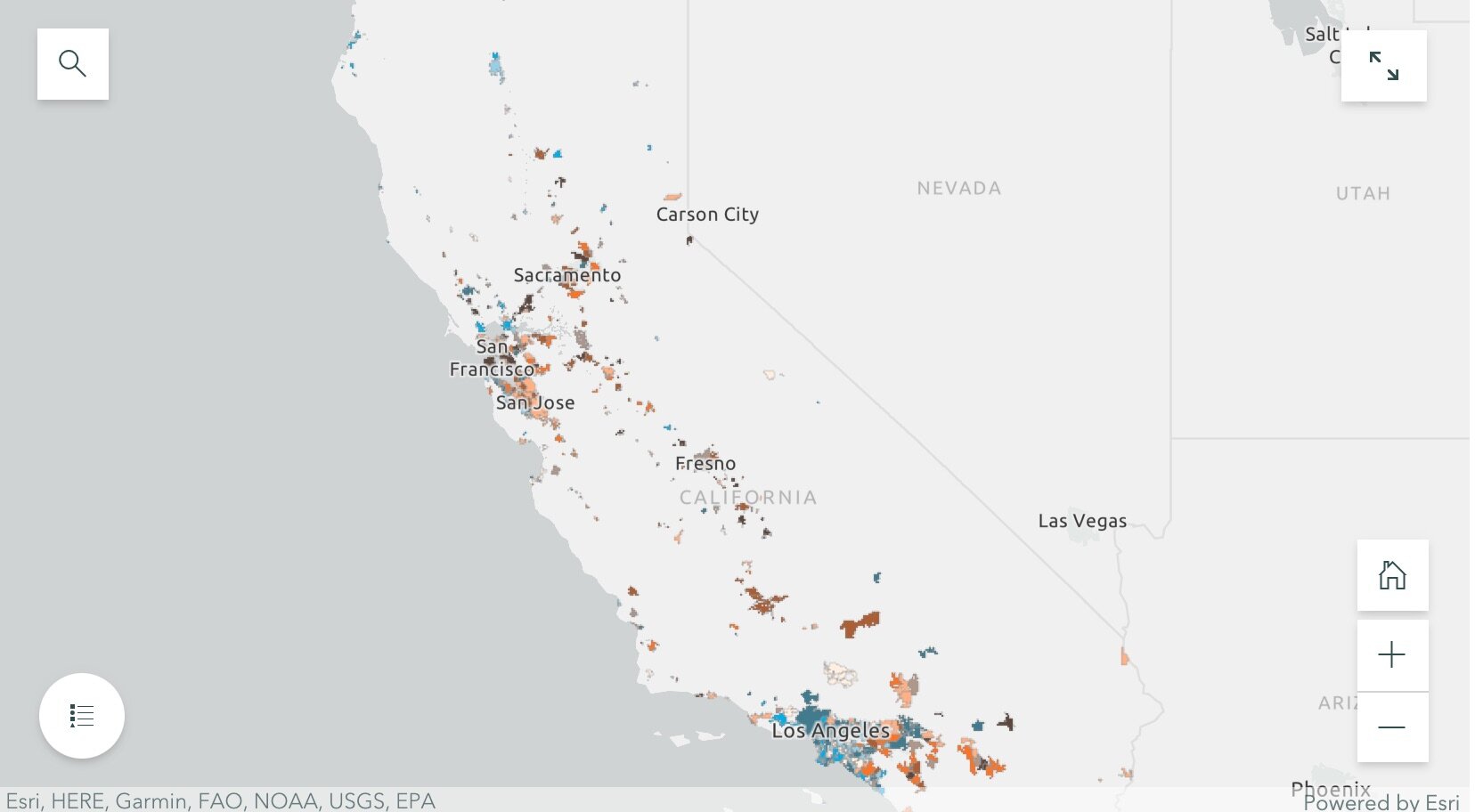

By dividing police department expenditures by total city expenditures for both 2014 and 2019, we were able to create a map showing what percentage of their municipal budgets 476 California cities were spending on policing. We were also able to visualize the percentage change from 2014 to 2019 in both the share of the budget spent on police and in population. Changes in police spending (and in population) were not at all consistent across the state. For example, cities that grew sometimes increased spending, but sometimes did not. Ben and Rezahn came up with a useful way of visualizing how police spending and population change co-vary (click on the map above to go to the site), and found 4 distinct trends in the cities examined:

Cities that increased police department (PD) spending, but saw almost no change in population (these are colored bright blue in the map);

Cities that saw increases in population, but experienced little or negative change in PD spending (these are bright orange in the map);

Cities that saw increases in both PD spending and population (these are dark brown in the map); and

Cities that saw little or negative change in both PD spending and population (these are cream in the map).
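The four trends above can be sketched as a simple two-way classification. The version below is only an illustration: the 5% cutoff separating an "increase" from "little or negative change" is a hypothetical threshold, not the one used in the Story Map, and the function names are ours. It also assumes that "change in PD spending" means the relative change in the share of the budget spent on police.

```python
def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, as a percentage."""
    return 100.0 * (new - old) / old


def classify_city(share_2014: float, share_2019: float,
                  pop_2014: float, pop_2019: float,
                  threshold: float = 5.0) -> str:
    """Return the map color for a city's 2014-2019 trend.

    threshold is a HYPOTHETICAL cutoff (in percent): changes above it
    count as an increase, anything else as little or negative change.
    """
    pd_up = pct_change(share_2014, share_2019) > threshold
    pop_up = pct_change(pop_2014, pop_2019) > threshold
    if pd_up and not pop_up:
        return "bright blue"    # PD spending up, population roughly flat
    if pop_up and not pd_up:
        return "bright orange"  # population up, PD spending flat or down
    if pd_up and pop_up:
        return "dark brown"     # both up
    return "cream"              # both flat or down


# Made-up inputs, NOT real data: share of budget (%) and population.
print(classify_city(20.0, 25.0, 100_000, 101_000))
print(classify_city(20.0, 19.0, 100_000, 120_000))
```

Note that this simplified rule places cities with increased PD spending and a shrinking population in the bright blue group as well; a different threshold or a separate category for population decline would change where individual cities land.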

They then focused on southern California and the Bay Area, and selected mid-size cities that exemplified the four trends to tell more detailed stories. For the Bay Area, these were: Vallejo (increased police department (PD) spending, but almost no change in population), San Ramon (increase in population, but little or negative change in PD spending), San Francisco (increases in both PD spending and population), and South San Francisco (little or negative change in both PD spending and population). For southern California, these were: Inglewood (increased PD spending, but almost no change in population), Irvine (increase in population, but little or negative change in PD spending), Palm Desert (increases in both PD spending and population), and Simi Valley (little or negative change in both PD spending and population). Check out the full Story Map here, and read more about these individual cities.

The 5-year changes in municipal police department spending are challenging to predict. Cities with high population growth from 2014 to 2019 did not consistently increase the percentage of their budgets spent on police. Similarly, cities that experienced low or even negative population growth varied dramatically in the percentage change in police department spending. The maps of annual police department spending percentages and 5-year relationships allowed us to identify these complexities, and will be an important starting point for future exploration.

The analysts on the project were Rezahn Abraha, a UC Berkeley Society and Environment Major, and Ben Satzman, a UC Berkeley Conservation and Resource Studies Major with minors in Sustainable Environmental Design and GIS. Both worked in collaboration with MBC and the Kellylab to find, clean, visualize, and analyze statewide data. Personnel involved in the project are: from Mapping Black California - Candice Mays (Partnership Lead), Paulette Brown-Hinds (Director), Stephanie Williams (Exec Editor, Content Lead), and Chuck Bibbs (Maps and Data Lead); from the Kellylab: Maggi Kelly (Professor and CE Specialist), Chippie Kislik (Graduate Student), Christine Wilkinson (Graduate Student), and Annie Taylor (Graduate Student).

We thank the Rausser College of Natural Resources, which funded this effort.

Fall 2020 Story Map: Mapping Police Spending in California Cities. Examine Southern California and the Bay Area in detail, check out a few interesting cities, or search for a city and click on it to see just how much it spent on policing in 2017.

Spring 2021 Story Map: How Do California Cities Spend Money on Policing? Mapping the variability of police spending from 2014-2019 in 476 California Cities.