NLCD 2011 released; webinar April 15, 2014

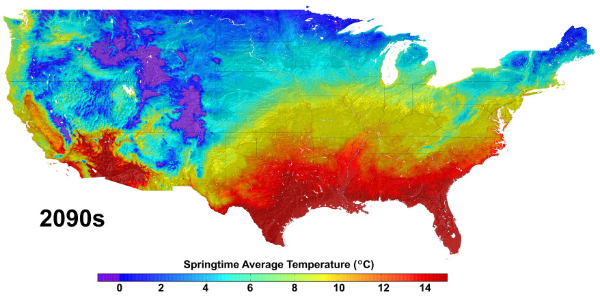

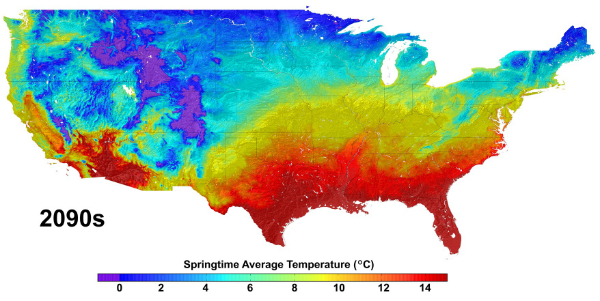

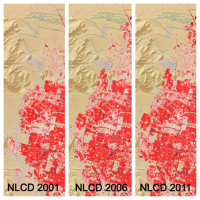


These three panels of National Land Cover Database data, one for each cycle (2001, 2006, 2011), depict land cover change in the vicinity of Spring Valley, a suburb of Las Vegas, NV. [Source: USGS] Just released, the latest edition of the nation’s most comprehensive look at land-surface conditions from coast to coast shows the extent of land cover types ranging from forests to urban areas. The National Land Cover Database (NLCD 2011) is made available to the public by the U.S. Geological Survey (USGS) and partners.

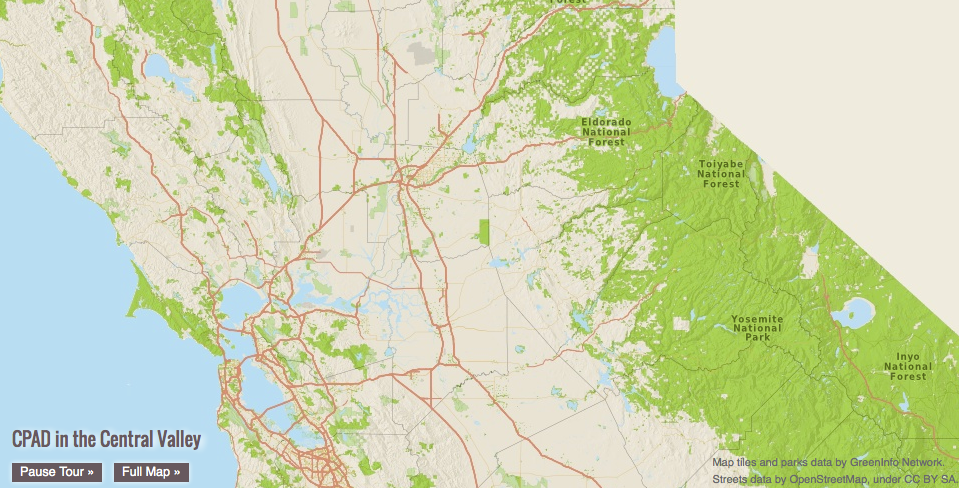

Dividing the lower 48 states into 9 billion geographic cells, the massive database provides consistent information about land conditions at regional to nationwide scales. Collected in repeated five-year cycles, NLCD data is used by resource managers and decision-makers to conduct ecosystem studies, determine spatial patterns of biodiversity, trace indications of climate change, and develop best practices in land management.

Based on Landsat satellite imagery taken in 2011, NLCD 2011 describes the land cover of each 30-meter cell of land in the conterminous United States and identifies which cells have changed since 2006. Nearly six such cells, each about 98 feet on a side, would fit on a football field. Land cover is broadly defined as the biophysical pattern of natural vegetation, agriculture, and urban areas. It is shaped by both natural processes and human influences. NLCD 2011 updates the previous database version, NLCD 2006.
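The cell figures quoted above can be checked with a few lines of arithmetic. This is a minimal sketch. The conversion factor (1 ft = 0.3048 m) and the football field dimensions (360 ft by 160 ft, end zones included) are my assumptions and are not taken from the USGS release.

```python
# Back-of-the-envelope checks on the NLCD cell figures quoted in the post.
# Assumptions (not from the post): 1 ft = 0.3048 m exactly, and an American
# football field measured as 360 ft x 160 ft including both end zones.

METERS_PER_FOOT = 0.3048
CELL_SIDE_M = 30.0   # NLCD cell size, from the post
CELL_COUNT = 9e9     # "9 billion geographic cells", from the post


def cell_side_feet(side_m: float = CELL_SIDE_M) -> float:
    """Convert the cell edge length from meters to feet."""
    return side_m / METERS_PER_FOOT


def implied_area_km2(count: float = CELL_COUNT, side_m: float = CELL_SIDE_M) -> float:
    """Total area covered by `count` square cells, in square kilometers."""
    return count * side_m ** 2 / 1e6


def cells_per_field(field_len_ft: float = 360.0, field_wid_ft: float = 160.0) -> float:
    """How many 30-m cells fit, by area, on a football field (assumed size)."""
    return (field_len_ft * field_wid_ft) / cell_side_feet() ** 2


if __name__ == "__main__":
    print(f"cell side: {cell_side_feet():.1f} ft")
    print(f"area of 9 billion cells: {implied_area_km2():,.0f} km^2")
    print(f"cells per football field: {cells_per_field():.2f}")
```

Under these assumptions a 30-meter cell is about 98.4 ft on a side, nine billion cells cover about 8.1 million km², and a football field holds about 5.95 cells by area, which agrees with "nearly six."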

A webinar about the release will be held Tuesday, April 15, 2014, at 2:00 PM Eastern Time: "New Version of the National Land Cover Database - April 4, 2014 Release"

The latest version of the National Land Cover Database (NLCD) for the conterminous United States will be publicly released on April 4, 2014. NLCD 2011 is the most up-to-date and extensive iteration of the National Land Cover Database, the definitive Landsat-based, 30-meter resolution land cover database for the Nation. NLCD 2011 products are completely integrated with those of previous versions (2001, 2006), providing a 10-year record of change for the Nation. Products include 16 classes of land cover, the percent of imperviousness in urban areas, and the percent of tree canopy cover. NLCD is constructed by the 10-member federal interagency Multi-Resolution Land Characteristics (MRLC) Consortium. This webinar will highlight the new features of NLCD 2011 and related applications. (Collin Homer, 605-594-2714, homer@usgs.gov)
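The basic idea behind a record of change, finding which cells changed class between two epochs, can be illustrated by comparing two co-registered rasters of class codes cell by cell. This is a simplified sketch, not the method USGS uses to produce NLCD change products. The grids are tiny, made-up arrays, and the class codes (11 open water, 22 and 23 low- and medium-intensity developed, 52 shrub/scrub) follow my reading of the NLCD legend, so check the MRLC legend before relying on them.

```python
from collections import Counter

# Hypothetical 3x4 grids of NLCD-style class codes for two epochs.
# Real NLCD rasters are vastly larger; these values are invented for illustration.
# Assumed codes: 11 = open water, 22 = developed low intensity,
# 23 = developed medium intensity, 52 = shrub/scrub.
Grid = list[list[int]]


def changed_cells(before: Grid, after: Grid) -> list[tuple[int, int]]:
    """Return (row, col) positions whose class code differs between epochs."""
    if len(before) != len(after) or any(len(a) != len(b) for a, b in zip(before, after)):
        raise ValueError("grids must have identical shape (same extent and cell size)")
    return [
        (r, c)
        for r, (row_b, row_a) in enumerate(zip(before, after))
        for c, (vb, va) in enumerate(zip(row_b, row_a))
        if vb != va
    ]


def transition_counts(before: Grid, after: Grid) -> Counter:
    """Count (from_class, to_class) pairs over the changed cells only."""
    return Counter((before[r][c], after[r][c]) for r, c in changed_cells(before, after))


nlcd_2006 = [
    [52, 52, 52, 22],
    [52, 52, 22, 22],
    [11, 52, 52, 23],
]
nlcd_2011 = [
    [52, 22, 22, 22],
    [52, 23, 22, 22],
    [11, 52, 22, 23],
]

if __name__ == "__main__":
    changes = changed_cells(nlcd_2006, nlcd_2011)
    print(len(changes), "of", sum(len(r) for r in nlcd_2006), "cells changed")
    for (src, dst), n in sorted(transition_counts(nlcd_2006, nlcd_2011).items()):
        # Each 30 m x 30 m cell covers 900 m^2.
        print(f"{src} -> {dst}: {n} cells ({n * 900 / 1e6:.4f} km^2)")
```

Because every cell is 30 m on a side, each changed cell corresponds to 900 m², so counts convert directly to area. In this invented example 4 of 12 cells change: three from shrub/scrub to low-intensity development and one to medium-intensity development.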

For more information and to download NLCD data, visit http://www.mrlc.gov/.

Please click the following link to join the webinar:

https://usgs.webex.com/usgs/j.php?ED=279876177&UID=490357047&RT=MiM3

At the start of the webinar, each location must call one of the dial-in numbers:

From the National Center in Reston, dial internally x4848

From all other USGS/DOI locations, dial 703-648-4848

From non-DOI locations, dial toll-free 855-547-8255

After the voice prompt, please enter the Conference Security Code 73848024 followed by the # key. You will hear a tone confirming that you have successfully joined the conference call. If you weren't successful, you will hear another voice prompt with instructions.

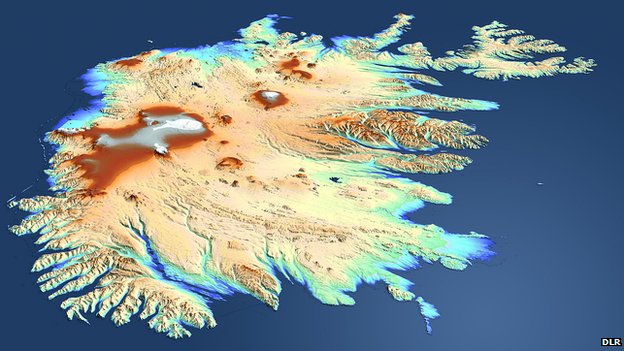

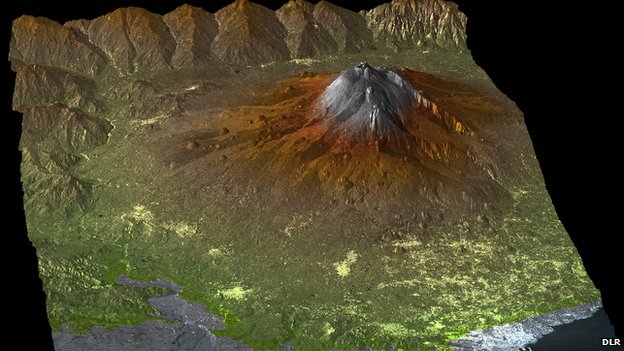

NSF was looking for transformative concepts and approaches to create integrated data management infrastructures across the Geosciences. It was interested in the multifaceted challenges of modern, data-intensive science and education, and envisioned an environment where low adoption thresholds and new capabilities act together to greatly increase the productivity and capability of researchers and educators working at the frontiers of Earth system science. I am not sure whether this will be funded in 2014, but NSF's interest reaffirms that the concept of a Digital Earth is widespread and will likely become an important part of academia.
