Day 3 Wrap Up from the NEON Data Institute 2017

Today we focused on uncertainty. Yay! http://neondataskills.org/data-institute-17/day3/

Tristan Goulden gave a talk on sources of uncertainty in the discrete return lidar data. Uncertainty comes from two main sources: geolocation, both horizontal and vertical (e.g. distance from the base station, the number and distribution of satellites, and the accuracy of the IMU), and processing (e.g. classification of the point cloud, interpolation method). The NEON remote sensing team has developed tests for each of these error sources. With all their lidar data, NEON provides a simulated point cloud error product that gives horizontal and vertical error per point in LAS format (cool!). These products show the error is largest at the edges of scans, obvi.

- The take homes are: fly within 20km of a base station; test your lidar sensor annually; check your boresight; dense canopy makes ground points sparser, so the DTM is problematic there; and initial point cloud misclassification can lead to large errors in downstream products. So much more in my notes.

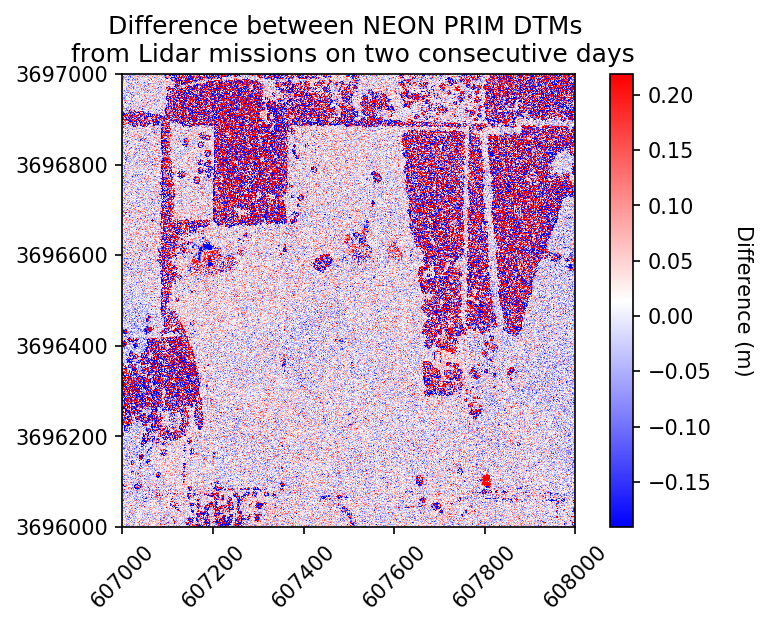

We then coded an example from the PRIN NEON site, where NEON captured lidar data twice within 2 days, so we could explore how different the data were. Again, we used Jupyter Notebooks and explored the relative differences in DSM and DTM values between the two lidar captures. The differences are random, but non-negligible, at least for the DSM. For the DTM, the range = 0.0-20cm; but for the DSM the range = 0.0-1.5m. The mean DSM is 6.34m, so the difference can be ~20% of the mean. The take home is that despite a 15cm vertical accuracy spec from vendors, you can get quite different values on different flights, and those differences can be considerable, especially with vegetation. In fact, NEON meets its 15cm accuracy requirement only in non-vegetated areas. Note, when you download NEON data, the lidar metadata includes line-to-line differences, which let you explore this. But if you are in heavily vegetated areas, you should expect higher than 15cm error.
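Here is a minimal sketch of that kind of comparison, not the workshop notebook itself. The function name `raster_difference_stats` and the two arrays are my own stand-ins: the arrays are synthetic random data, not the PRIN rasters, and I am assuming the two rasters are already co-registered on the same grid. The last lines just redo the arithmetic from the numbers quoted above.

```python
import numpy as np

def raster_difference_stats(first, second):
    """Summarize per-pixel differences between two co-registered rasters."""
    first = np.asarray(first, dtype=float)
    second = np.asarray(second, dtype=float)
    diff = first - second
    max_abs = float(np.nanmax(np.abs(diff)))
    return {
        "mean_diff": float(np.nanmean(diff)),   # near zero if errors are random
        "std_diff": float(np.nanstd(diff)),     # spread of the differences
        "max_abs_diff": max_abs,                # worst-case disagreement
        "max_relative_to_mean": max_abs / float(np.nanmean(first)),
    }

# Synthetic stand-ins for two DSM captures (hypothetical values, in meters).
rng = np.random.default_rng(0)
dsm_flight1 = rng.uniform(0.0, 12.0, size=(100, 100))
dsm_flight2 = dsm_flight1 + rng.normal(0.0, 0.3, size=(100, 100))

stats = raster_difference_stats(dsm_flight1, dsm_flight2)
print(stats)

# Arithmetic from the numbers in this post: a 1.5 m difference
# against a mean DSM of 6.34 m.
post_relative = 1.5 / 6.34
print(f"{post_relative:.2f}")  # about 0.24
```

The `nan*` functions are there because real rasters usually carry no-data pixels, which you would convert to NaN before differencing.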

After lunch we launched into the NEON Imaging Spectrometer data and uncertainty with Nathan Leisso. This is something I had not really thought about before this workshop.

We talked about orthorectification and geolocation, focal plane characterization, spectral calibration and radiometric calibration, and all the possible sources of error that can creep into the data, like blurring and ghosting of light. NEON calibrates their data across these areas, and provides information on each. I don't think there are many standards for reporting these kinds of spectral uncertainties.

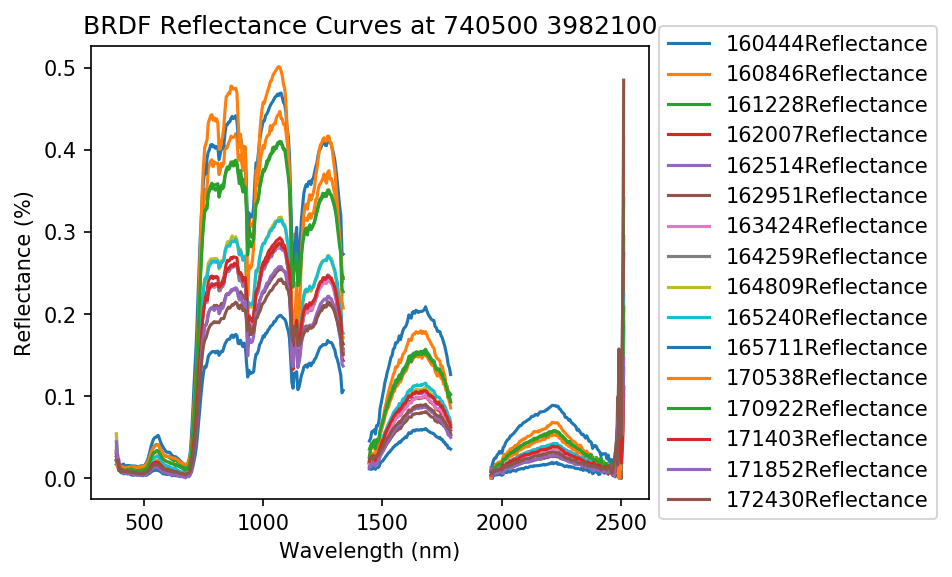

The first live coding exercise (Hyperspectral Variation Uncertainty Analysis in Python) looked at the NEON site F07A, at which NEON acquired 18 individual flights (for BRDF work) over an hour on one day. We used these data and plotted the different spectral reflectance curves for several pixels. For a vegetated pixel, the NIR can vary tremendously! (e.g. 20% reflectance compared to 50% reflectance, depending on time of day, solar angle, etc.) Wow! I should note that related indices like NDVI, which are ratios, will not be as affected. Also, you can normalize the output using some nifty methods like the Standard Normal Variate (SNV) algorithm, if you have large areas over which you can gather multiple samples.
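To see why a ratio index and SNV are less sensitive to this kind of variation, here is a small sketch under a strong simplifying assumption: that the flight-to-flight change is a pure multiplicative brightness factor. Real illumination and BRDF effects are messier than that. The spectrum values, band positions, and the 1.8 factor are hypothetical, and `snv` follows the usual per-spectrum definition (subtract the mean, divide by the standard deviation), which may differ in detail from what the workshop notebook does.

```python
import numpy as np

def ndvi(red, nir):
    """Normalized Difference Vegetation Index from red and NIR reflectance."""
    return (nir - red) / (nir + red)

def snv(spectrum):
    """Standard Normal Variate: center and scale a single spectrum."""
    spectrum = np.asarray(spectrum, dtype=float)
    return (spectrum - spectrum.mean()) / spectrum.std()

# Hypothetical vegetated-pixel reflectance at six bands (not NEON data).
base = np.array([0.04, 0.08, 0.05, 0.45, 0.50, 0.48])
# Same pixel under an idealized, purely multiplicative brightness change.
brighter = 1.8 * base

RED, NIR = 2, 4  # assumed band positions in this toy spectrum

ndvi_base = ndvi(base[RED], base[NIR])
ndvi_bright = ndvi(brighter[RED], brighter[NIR])

print(base[NIR], brighter[NIR])                  # raw NIR differs a lot
print(np.isclose(ndvi_base, ndvi_bright))        # the ratio index does not
print(np.allclose(snv(base), snv(brighter)))     # nor does the SNV spectrum
```

The scale factor cancels in both the NDVI ratio and the SNV division, which is the whole trick. Anything additive, like stray light, would not cancel this way.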

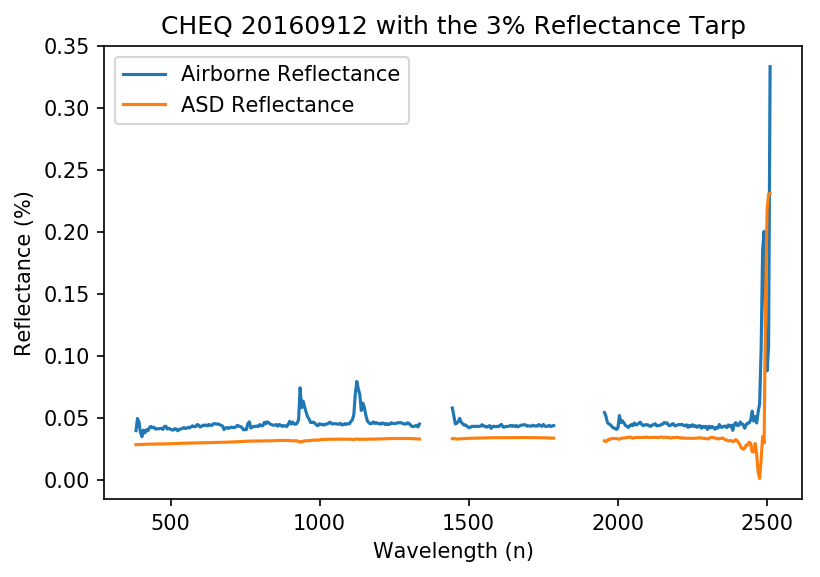

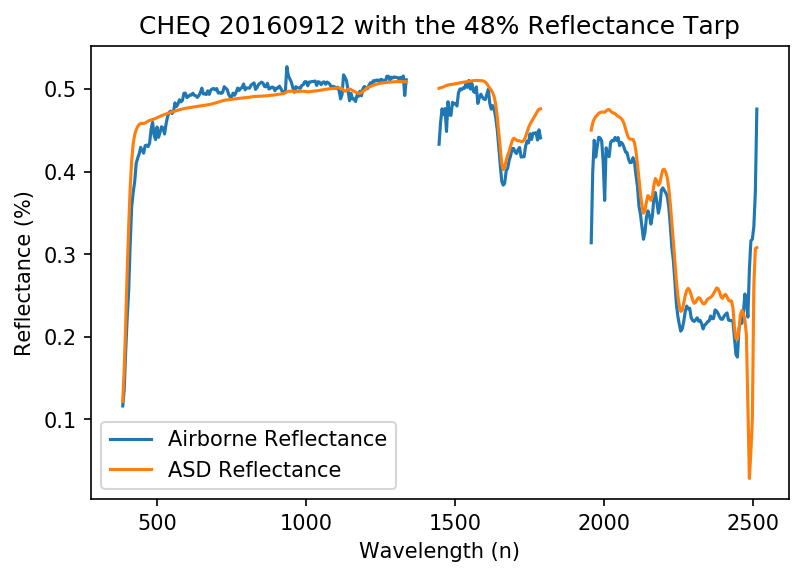

The second live coding exercise (Assessing Spectrometer Accuracy using Validation Tarps with Python) focused on a calibration experiment they conducted at CHEQ for the NIS instrument. They laid out two reflectance tarps - 3% (black) and 48% (white), measured reflectance with an ASD spectrometer, and flew over with the NIS. We compared the data across wavelengths. Results summary: small absolute differences between ASD and NIS across wavelengths; water absorption bands play a role; percent differences can be quite high - up to 50% for the black tarp. The large differences on the black tarp come mostly from stray light from neighboring areas. NEON has a calibration method for this (they call it their "de-blurring correction").
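The black tarp result makes sense once you write out the percent difference. Below is a rough sketch: the tarp reflectances (3% and 48%) come from the experiment above, but the 0.015 offset is a number I made up purely to illustrate the arithmetic, and `percent_difference` is my own helper name, not something from the workshop code.

```python
import numpy as np

def percent_difference(measured, reference):
    """Signed percent difference of measured relative to reference."""
    measured = np.asarray(measured, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return 100.0 * (measured - reference) / reference

# Nominal tarp reflectances from the experiment (as fractions).
black_ref, white_ref = 0.03, 0.48

# Hypothetical additive offset, e.g. from stray light (illustrative only).
offset = 0.015

black_pct = float(percent_difference(black_ref + offset, black_ref))
white_pct = float(percent_difference(white_ref + offset, white_ref))

print(f"black tarp: {black_pct:.1f}%")  # same absolute error, large percent error
print(f"white tarp: {white_pct:.1f}%")  # same absolute error, small percent error
```

The same small absolute error is a much bigger fraction of a dark target than of a bright one, so it is worth looking at both absolute and percent differences.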

Fun additional take-home messages/resources:

- All NEON point cloud classifications are done with LAStools. Go LAStools! https://rapidlasso.com/lastools/

- Check out PDAL, which is like GDAL for point clouds. It can be used from bash. Learned from my workshop neighbor Sergio Marconi. https://www.pdal.io/

- Reflectance tarps are made by GroupVIII http://www.group8tech.com/

- ATCOR http://www.rese.ch/products/atcor/ says we should be able to rely on 3-5% error on reflectance when atmospheric correction is done correctly (say that 10 times fast) with a well-calibrated instrument.

- NEON hyperspectral data is stored in HDF5 format. HDFView is a great tool for interrogating the metadata, among other things.

Thanks to everyone today! Megan Jones (our fearless leader), Tristan Goulden (Discrete Lidar Uncertainty and all the coding), Nathan Leisso (spectral data uncertainty), and Amanda Roberts (NEON intern - spectral uncertainty).