Day 1 Wrap Up from the NEON Data Institute 2017

I left Boulder 20 years ago on a wing and a prayer with a PhD in hand, overwhelmed with bittersweet emotions. I was sad to leave such a beautiful city and nervous about what was to come, but excited to start something new in North Carolina. My future was uncertain, and as I took off from DIA that final time I basically had Tom Petty's Free Fallin' and Learning to Fly on repeat on my Walkman. Now I am back, and summer in Boulder is just as breathtaking as I remember it: clear blue skies, the stunning Flatirons making a play at outshining the snow-dusted Rockies behind them, and crisp fragrant mountain breezes acting as my madeleine. I'm here to visit the National Ecological Observatory Network (NEON) headquarters, attend their 2017 Data Institute, and reinvest in my skillset for open reproducible workflows in remote sensing.

What a day! http://neondataskills.org/data-institute-17/day1/

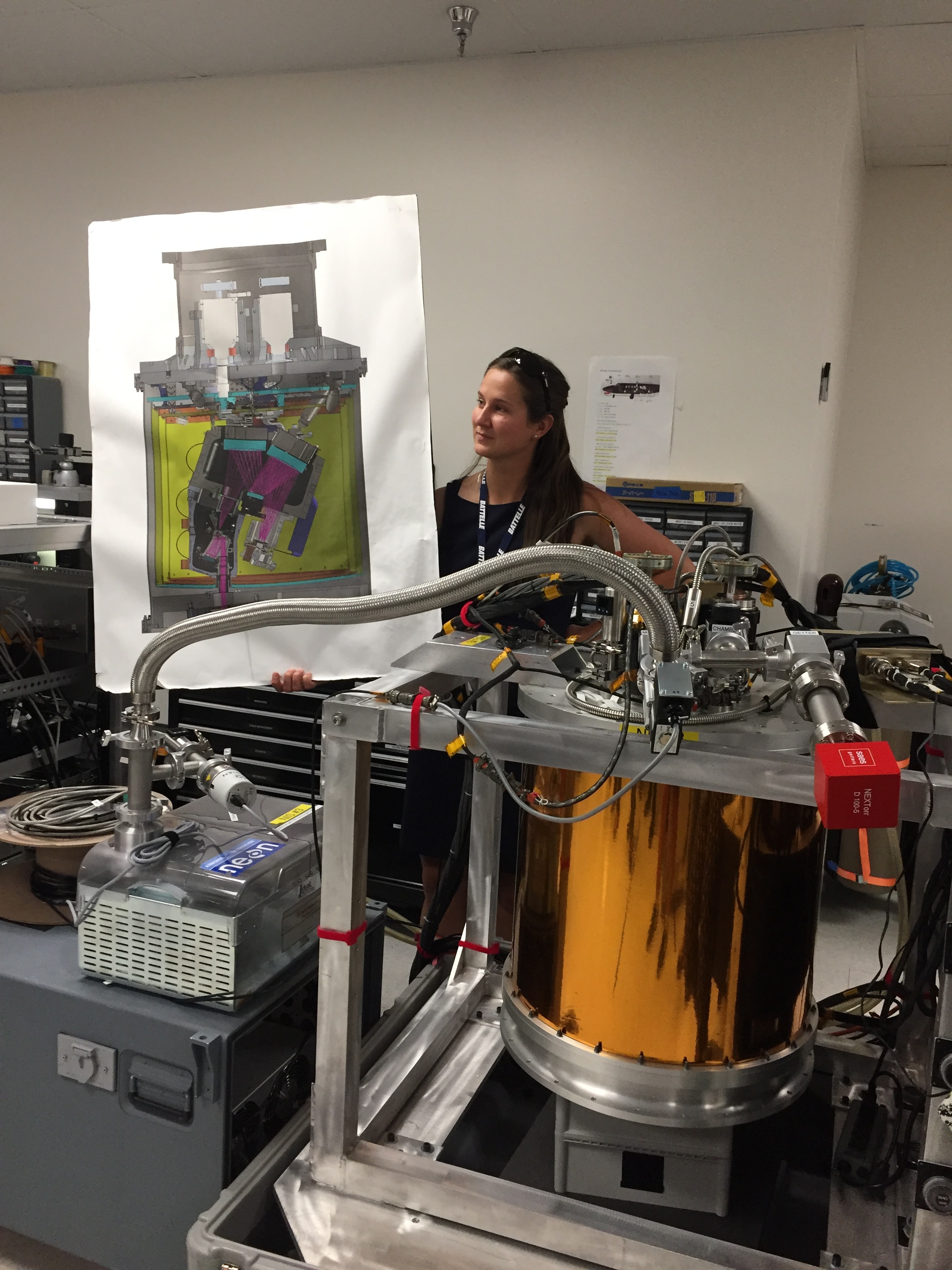

Attendees (about 30) included graduate students, old dogs (new tricks!) like me, and research scientists interested in building reproducible workflows into their work. We are a pretty even mix of ages and genders. The morning session focused on learning about the NEON program (http://www.neonscience.org/): its purpose, sites, sensors, data, and protocols. NEON, funded by NSF and managed by Battelle, was conceived in 2004 and will go online in January 2018 for a 30-year mission providing free and open data on the drivers of and responses to ecological change. NEON data comes from IS (instrumented systems), OS (observation systems), and RS (remote sensing). We focused on the Airborne Observation Platform (AOP), which uses 2, soon to be 3, aircraft, each with a payload of a hyperspectral sensor (from JPL; 426 bands of 5 nm each covering 380-2510 nm, 1 mrad IFOV, 1 m resolution at 1000 m AGL), lidar sensors (Optech and soon to be Riegl, discrete and waveform), and an RGB camera (PhaseOne D8900). These sensors produce co-registered raw data, which are processed at NEON headquarters into various levels of data products. Flights are planned to cover each NEON site once, timed to capture 90% or higher of peak greenness, which is pretty complicated when distance and weather are taken into account. Pilots and techs are on the road and in the air from March through October collecting these data.

In the afternoon session, we took a fairly immersive dunk into Jupyter notebooks for exploring hyperspectral imagery in HDF5 format. We did exploration, band stacking, widgets, and vegetation indices. We closed with a fast discussion about TGF (The GitHub Flow): the way to store, share, and version-control your data and code to ensure reproducibility. We forked, cloned, committed, pushed, and pulled. Not much more to write about, but the whole day was awesome!
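For anyone wondering what "vegetation indices" boils down to, here is a minimal sketch of NDVI in plain Python. It is not the notebook code from the workshop. The evenly spaced band centres, the 660 nm and 850 nm target wavelengths, the `cube[row][col][band]` layout, and every function name are my own assumptions for illustration; real NEON files carry their own wavelength metadata, and in practice you would work with arrays read from the HDF5 file rather than nested lists.

```python
# Assumed sensor layout, based on the specs quoted above:
# 426 bands covering 380-2510 nm. Even spacing is an assumption.
N_BANDS = 426
MIN_NM, MAX_NM = 380.0, 2510.0


def band_centers(n_bands=N_BANDS, lo=MIN_NM, hi=MAX_NM):
    """Return hypothetical, evenly spaced band-centre wavelengths in nm."""
    step = (hi - lo) / (n_bands - 1)
    return [lo + i * step for i in range(n_bands)]


def nearest_band(wavelength_nm, centers):
    """Index of the band whose centre is closest to wavelength_nm."""
    return min(range(len(centers)),
               key=lambda i: abs(centers[i] - wavelength_nm))


def ndvi(red, nir):
    """NDVI for one pixel: (NIR - red) / (NIR + red).

    Returns None when the denominator is zero (e.g. a no-data pixel).
    """
    total = nir + red
    if total == 0:
        return None
    return (nir - red) / total


def ndvi_image(cube, red_idx, nir_idx):
    """Compute NDVI for every pixel of a cube indexed cube[row][col][band]."""
    return [[ndvi(px[red_idx], px[nir_idx]) for px in row] for row in cube]


def make_pixel(red, nir, red_idx, nir_idx, n_bands=N_BANDS):
    """Build a synthetic pixel spectrum with only two bands filled in."""
    spectrum = [0.0] * n_bands
    spectrum[red_idx] = red
    spectrum[nir_idx] = nir
    return spectrum


if __name__ == "__main__":
    centers = band_centers()
    red_idx = nearest_band(660.0, centers)   # assumed red wavelength
    nir_idx = nearest_band(850.0, centers)   # assumed near-infrared wavelength

    # A made-up 1 x 2 image: one leafy pixel, one bare-ish pixel.
    cube = [[make_pixel(0.05, 0.45, red_idx, nir_idx),
             make_pixel(0.30, 0.35, red_idx, nir_idx)]]
    print(ndvi_image(cube, red_idx, nir_idx))
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so the leafy pixel scores much closer to 1 than the bare one.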

Fun additional take-home messages:

- NEON is amazing. I should build some class labs around NEON data, and NEON classroom training materials are available: http://www.neonscience.org/resources/data-tutorials

- Making participants do organized homework is necessary for complicated workshop content: http://neondataskills.org/workshop-event/NEON-Data-Insitute-2017

- HDF5 as a possible alternative data format for lidar - holding both discrete and waveform data

- NEON imagery data is FedExed to headquarters daily after it is collected

- I am a crap python coder

- #whofallsbehindstaysbehind

- Tabs are my friend

Thanks to everyone today, including: Megan Jones (Main leader), Nathan Leisso (AOP), Bill Gallery (RGB camera), Ted Haberman (HDF5 format), David Hulslander (AOP), Claire Lunch (Data), Cove Sturtevant (Towers), Tristan Goulden (Hyperspectral), Bridget Hass (HDF5), Paul Gader, Naupaka Zimmerman (GitHub flow).