The evolution of a Digital Earth

In 1998 Al Gore made his now famous speech entitled "The Digital Earth: Understanding our planet in the 21st Century". He described the possibilities of, and the need for, a new concept in earth science, communication and society. He envisioned technology that would allow us "to capture, store, process and display an unprecedented amount of information about our planet and a wide variety of environmental and cultural phenomena." From the vantage point of our hyper-geo-immersed lifestyle today, his description of this Digital Earth is prescient yet rather cumbersome:

"Imagine, for example, a young child going to a Digital Earth exhibit at a local museum. After donning a head-mounted display, she sees Earth as it appears from space. Using a data glove, she zooms in, using higher and higher levels of resolution, to see continents, then regions, countries, cities, and finally individual houses, trees, and other natural and man-made objects. Having found an area of the planet she is interested in exploring, she takes the equivalent of a "magic carpet ride" through a 3-D visualization of the terrain.”

He said: "Although this scenario may seem like science fiction, most of the technologies and capabilities that would be required to build a Digital Earth are either here or under development. Of course, the capabilities of a Digital Earth will continue to evolve over time. What we will be able to do in 2005 will look primitive compared to the Digital Earth of the year 2020." In 1998, the necessary technologies were computational science, mass storage, satellite imagery, broadband networks, interoperability, and metadata.

He anticipated change: "Of course, further technological progress is needed to realize the full potential of the Digital Earth, especially in areas such as automatic interpretation of imagery, the fusion of data from multiple sources, and intelligent agents that could find and link information on the Web about a particular spot on the planet. But enough of the pieces are in place right now to warrant proceeding with this exciting initiative.”

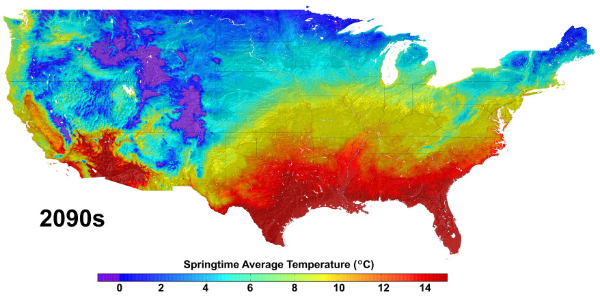

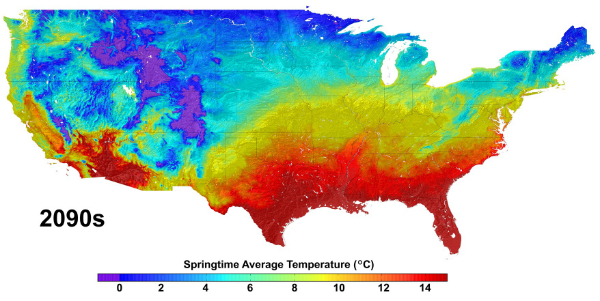

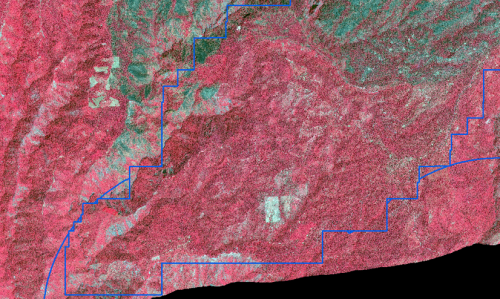

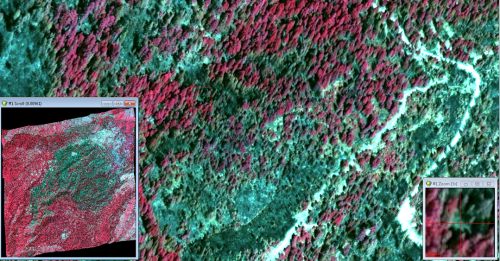

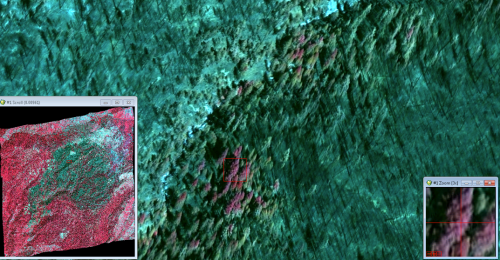

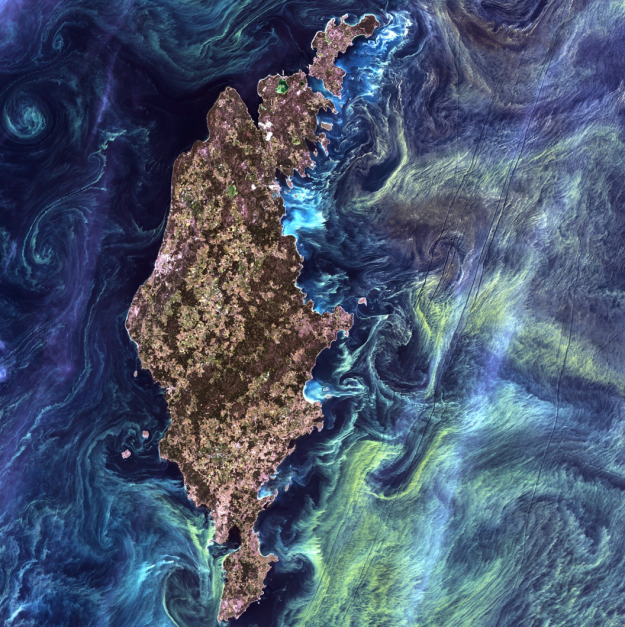

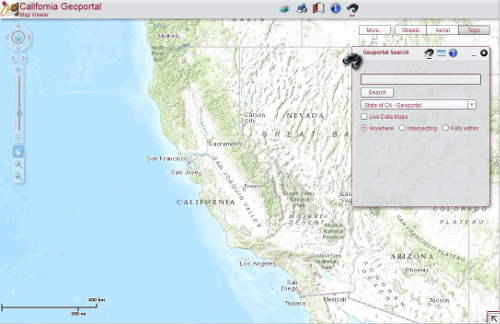

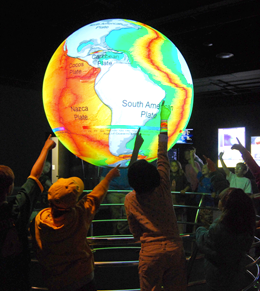

Example from NOAA's Science on a Sphere project

Much has changed since he gave his talk. We now have numerous virtual globes for data exploration, for example Google Earth, NASA’s WorldWind, ESRI’s ArcGIS Explorer, Bing Maps 3D, TerraExplorer, and Marble. (These virtual examples are made tangible with NOAA's terrific Science on a Sphere project.)

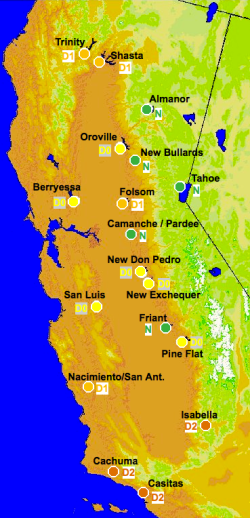

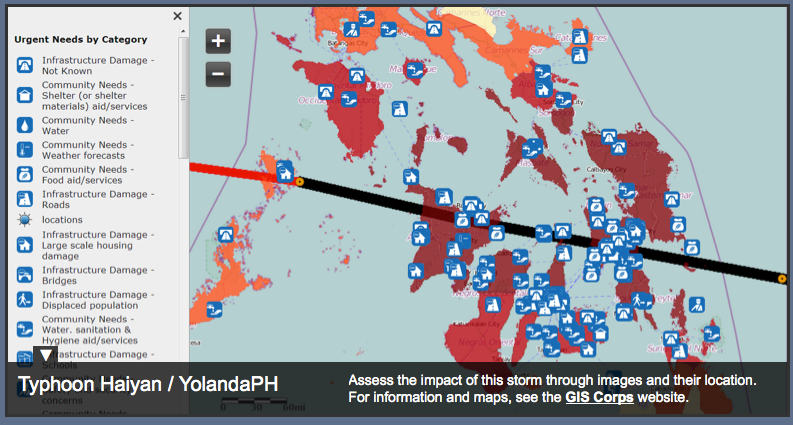

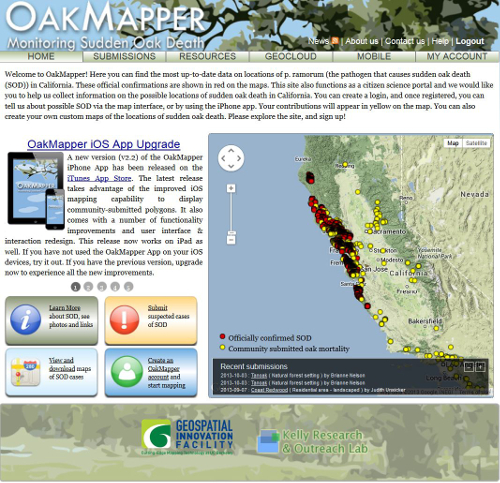

We have also realized a new vision of the Digital Earth that includes much more than immersive viewing of data. Today’s Digital Earth visions include analytics and expertise for solving problems that are often cross-disciplinary and large scale. We also make much more use of sensor networks and the geoweb (e.g. volunteered geographic information and crowdsourcing) than was anticipated in 1998. Examples of this multi-disciplinary Digital Earth concept include Google Earth Engine (and its recent forest loss product), NASA Earth Exchange, and our own HOLOS.

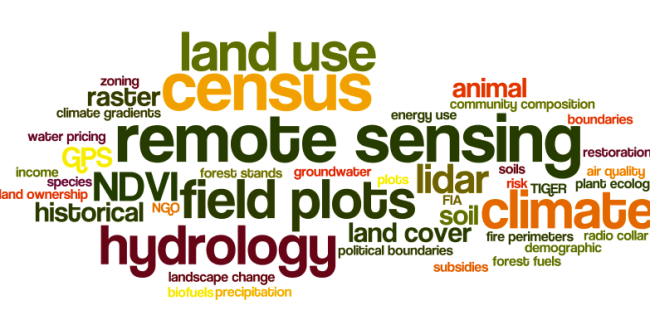

NSF has adopted this idea for its EarthCube initiative. Last year NSF was looking for transformative concepts and approaches to create integrated data management infrastructures across the geosciences. They were interested in the multifaceted challenges of modern, data-intensive science and education, and envisioned an environment where low adoption thresholds and new capabilities act together to greatly increase the productivity and capability of researchers and educators working at the frontiers of Earth system science. I am not sure if this will be funded in 2014, but it reaffirms that the concept of the Digital Earth is widespread and will likely be an important part of academia.
