Day 2 Wrap Up from the NEON Data Institute 2017

First of all, Pearl Street Mall is just as lovely as I remember, but OMG it is so crowded, with so many new stores and chains. Still, good food, good views, hot weather, lovely walk.

Welcome to Day 2! http://neondataskills.org/data-institute-17/day2/

Our morning session focused on reproducibility and workflows with the great Naupaka Zimmerman. Remember the characteristics of reproducibility: organization, automation, documentation, and dissemination. We focused on organization, and spent an enjoyable hour sorting through an example messy directory of miscellaneous data files and code. The directory looked a bit like many of my directories. Lesson learned. We then moved on to working with new data and git to reinforce yesterday's lessons. Git was super confusing to me 2 weeks ago, but now I think I love it. We also went back and forth between Jupyter notebooks and standalone Python scripts, abstracted hard-coded values into variables, and lo and behold I got my script to run. All the git stuff is from http://swcarpentry.github.io/git-novice/

The afternoon focused on Lidar (yay!). Prior to coding we talked about discrete and waveform data and how they are collected, and heard about the OpenTopography (http://www.opentopography.org/) project from Benjamin Gross. The OpenTopography talk was really interesting. They are not just a data distributor any more; they also provide an HPC framework (mostly TauDEM for now) on their servers at SDSC (http://www.sdsc.edu/). They are going to roll out user-initiated HPC functionality soon, so stay tuned for their new "pluggable assets" program. This is well worth checking into. We also spent some time live coding in Python with Bridget Hass, working with a canopy height model (CHM) from the SERC site in California, and had a nerve-wracking code challenge to wrap up the day.

Fun additional take-home messages/resources:

- The ISO international standard for dates (ISO 8601) = YYYY-MM-DD

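- Missing values in R = NA; in Python they are usually None or NaN, while -9999 is a common "no data" placeholder in raster files that you need to mask before doing any math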

- For cleaning messy data, check out OpenRefine, a free and open source tool: http://openrefine.org/

- Excel is cray-cray, best practices for spreadsheets: http://www.datacarpentry.org/spreadsheet-ecology-lesson/

- Morpho (from DataONE) to enter metadata: https://www.dataone.org/software-tools/morpho

- Pay attention to file size with your git repositories - check out: https://git-lfs.github.com/. Git is good for things you do with your hands (like code), not for large data.

- Funny how many food metaphors are used in tech teaching: APIs as a menu in a restaurant; git add vs git commit as a grocery cart before and after purchase; finding GIS data is sometimes like shopping for ingredients in a specialty grocery store (that one is mine)...

- Markdown renderer: http://dillinger.io/

- MIT License, like Creative Commons for code: https://opensource.org/licenses/MIT

- The name "Jupyter" comes from Julia, Python & R, the languages it runs. Who knew?

- There is a new project called "Feather", a file format for data frames that can be read and written from both Python and R: https://blog.rstudio.org/2016/03/29/feather/

- All the NEON airborne data can be found here: http://www.neonscience.org/data/airborne-data

- Information on the TIFF specification and TIFF tags is here: http://awaresystems.be/. Note that their TIFF Tag Viewer is Windows-only.
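
To make two of the take-homes above concrete (ISO dates and missing values), here is a minimal sketch using only the Python standard library. It is not code from the Institute: the date, the height values, and the helper names `mask_no_data` and `mean_ignoring_missing` are made up for illustration, and -9999 is assumed to be the "no data" placeholder in the data.

```python
from datetime import date, datetime
import math

# ISO 8601 calendar dates are written YYYY-MM-DD.
field_day = date(2017, 6, 20)  # hypothetical example date
iso_text = field_day.isoformat()
parsed = datetime.strptime(iso_text, "%Y-%m-%d").date()

# A nice side effect: ISO dates sort chronologically even as plain text,
# which is handy for file names.
stamps = ["2017-06-20", "2016-12-31", "2017-01-05"]
sorted_stamps = sorted(stamps)

NO_DATA = -9999  # assumed placeholder value; check your file's metadata


def mask_no_data(values, no_data=NO_DATA):
    """Replace the no-data placeholder with NaN so it cannot skew the math."""
    return [math.nan if v == no_data else float(v) for v in values]


def mean_ignoring_missing(values):
    """Average only the values that are not NaN."""
    valid = [v for v in values if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else math.nan


heights = [12.5, -9999, 30.0, -9999, 17.5]  # hypothetical canopy heights (m)
cleaned = mask_no_data(heights)

print(iso_text, sorted_stamps)
print("naive mean: ", sum(heights) / len(heights))
print("masked mean:", mean_ignoring_missing(cleaned))
```

The naive mean comes out hugely negative because the placeholder is treated as a real height, which is exactly why the placeholder needs to be masked first.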

Thanks to everyone today! Megan Jones (our fearless leader), Naupaka Zimmerman (Reproducibility), Tristan Goulden (Discrete Lidar), Keith Krause (Waveform Lidar), Benjamin Gross (OpenTopography), Bridget Hass (coding lidar products).

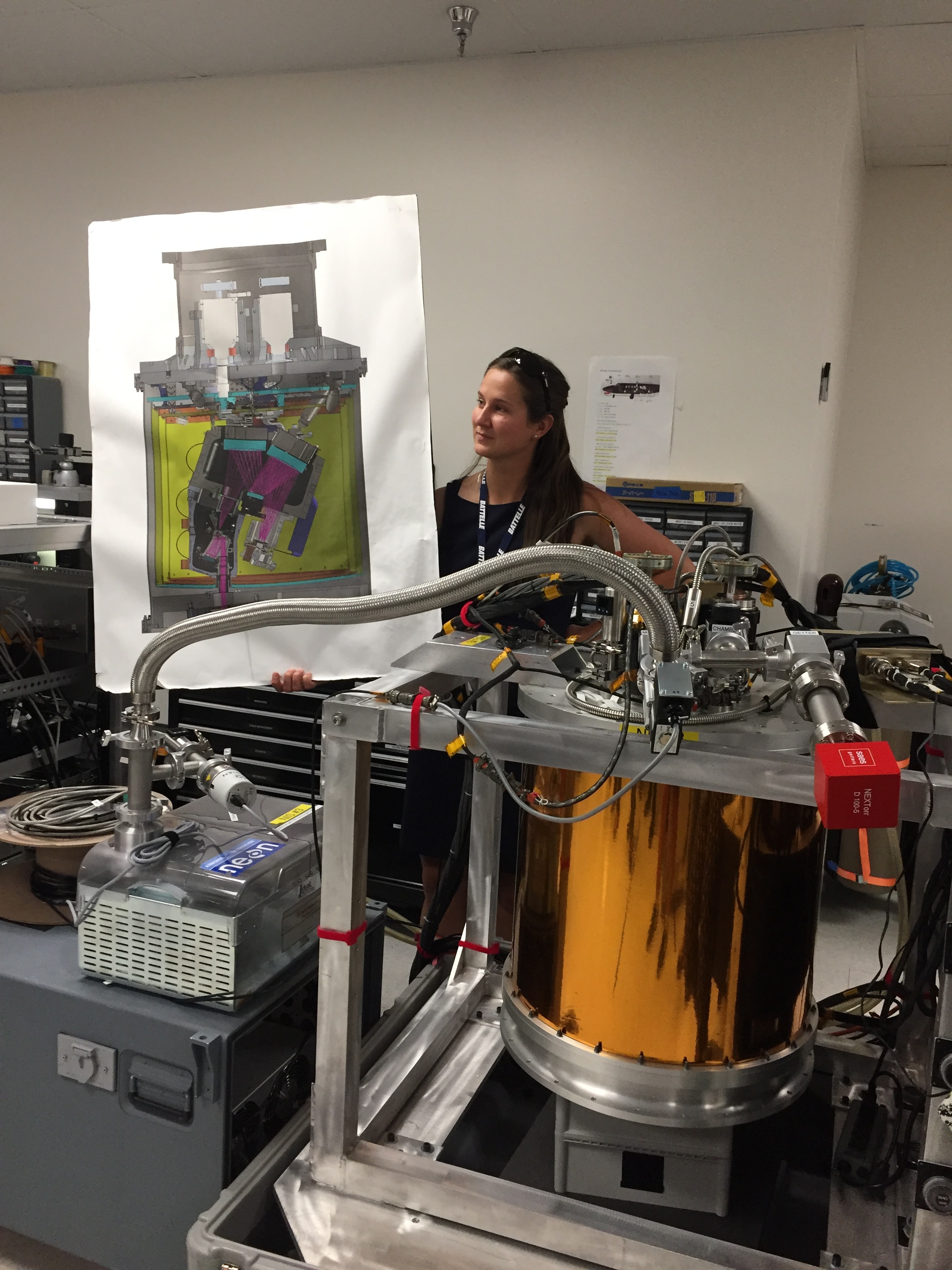

Our home for the week