I for one welcome our ESRI overlords: Wrap Up from the 2016 ESRI User Conference

What a full week! Here is my wrap-up from a great 2016 ESRI User Conference.

I haven't been here in many years, and I am glad I came. I learned a lot, came away with new ideas for workshops and classes and for how IGIS can be of more service to ANR, and got started on ArcGIS Pro, ESRI's eventual replacement for ArcGIS Desktop. Pro has a completely new user interface that is very clear; you can visualize, edit, and perform analysis in both 2D and 3D; it is super fast via multithreading & 64-bit processing (finally); and it has new icons and a bunch of new processing tools. A bunch of very cool stuff comes with 1.3 soon.

Day 1: Monday was spent in big-picture, inspirational talks from camera-beautiful GIS people. 15,000 in the audience, 10 massive screens. I loved it; I felt like I was at a really intense but sedate rock concert. Note to ESRI: could you put the chairs any closer together? The highlight was keynote speaker Andrea Wulf, talking about her obsession with Alexander von Humboldt. Note to self: get the book. In addition to the big-picture stuff, Day 1 is ESRI's chance to highlight this year's software improvements as we continue the voyage away from the desktop workflow: Pro, integrated 3D, green design, Apps, seamless integration with the web.

Day 2: Tuesday focused on workshops. I picked four workshops from the Spatial Statistics Team at ESRI. These were led by Lauren Bennett and her crew (Flora Vale, Jenora D'Acosta). Uniformly fantastic. I had downloaded Pro the night before, and with some trepidation got started and followed along. I am happy to report that it seems very intuitive. I have read elsewhere about worries that there is loss of cartographic control, and I will look into that. I learned about the Spatial Stats toolbox in Pro, and some very new capacity in optimization of pattern analysis (you know how difficult it is to pick that distance kernel), and in the new space-time cube capabilities. The space-time capabilities make very complex analyses doable, and are very exciting, but still a bit constraining if you don't know how to update the code. Oh yeah, and space-time uses netCDF format.

Day 3: For Wednesday I chose workshops that would help me update class labs: Python + Raster analysis; Site suitability in Pro; Cost Connectivity in Pro; and some crazy cool prediction tool called Empirical Bayesian Kriging, which I will be checking out. I guess EBK has been around for a while, but it is now implemented in ESRI software. The new suite of tools in site suitability + connectivity is going to be key. Kevin M. Johnston and Elizabeth Graham led the Site Suitability and Connectivity workshops, and Eric Krause led the Kriging workshop.

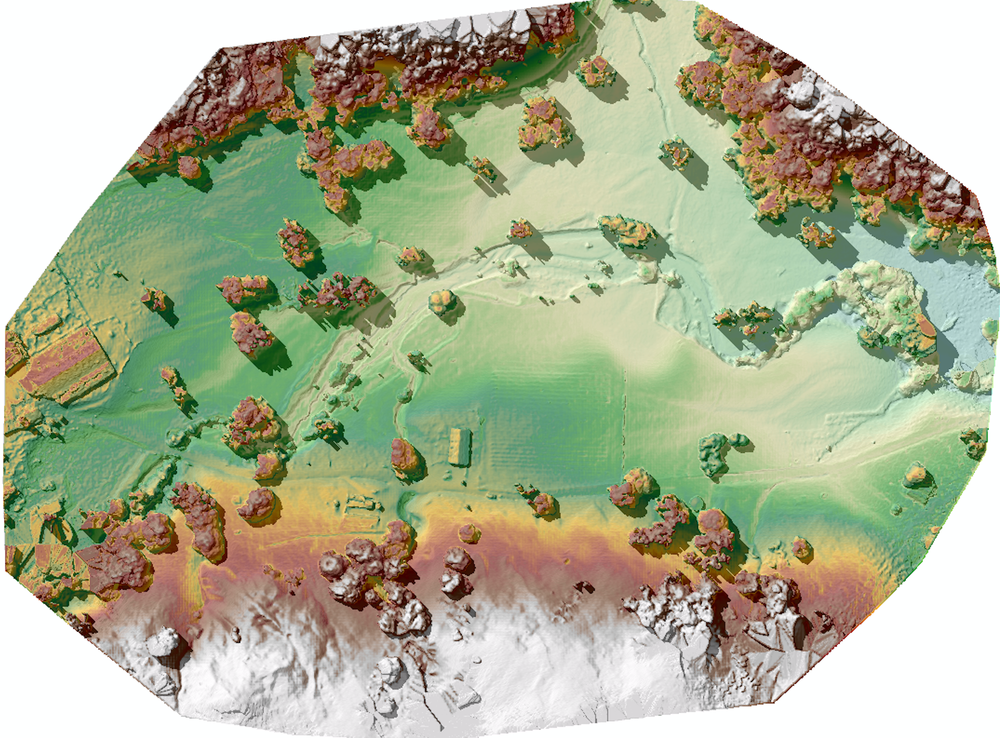

Day 4: All day was spent in Pix4D immersion with the excellent Pix4D support/training team. Pix4D is the gold standard for drone imagery workflow; it also serves as the backend engine for ESRI's Drone2Map application, which I have not tried. Most of the morning was spent in basics: workflow basics, application domains, super wow factor examples like 0.5cm resolution imagery processing. We also looked at workflow and best practices, accuracy, and some example projects. The room was full of 50+ people, many with specific questions about a range of projects. Got to hang out a bit with Greg Crustinger, who is now at Parrot. Even more excited now about our new Sequoia cameras.

Thoughts:

- Little Italy has some great restaurants.

- We need to move to Pro soon. Probably not in time for Fall's class, but soon. GIF and IGIS workshops are going to have to get updated.

- I need to get more in touch with imagery analysis in Pro. Especially with the segmentation and classification part.

- I want to recreate the workflow for site suitability + locate regions + cost connectivity soon.

- Performing complex analyses in a GUI is increasingly easy, but is that a good thing? We have to be increasingly vigilant about training the fundamentals as well.

- One frustration about these workshops that I bet my workshop participants share: the data are all perfect and ready to go. We need to keep talking about where to get data, and how to wrangle it into shape.

- Could drones mean the resurrection of photogrammetry? At least in the classroom?

Trends:

- Hyper granularity: how do we process these increasingly fine resolution datasets?

- Global to local focus in modeling: GWR, optimized Getis-Ord, and empirical Bayesian kriging all try to deal with and model local variability across a complex study area;

- Incorporation of permutations and distribution functions in modeling has been made way easier;

- Big Data, multidimensional data, temporal data: ESRI is really trying to be a better informatics platform for research;

- ESRI seems to be increasingly relying on external and open standards for new data formats/products; this is a great trend;

- Decision-making: all these analyses need to support decision-making; communication remains critical, tools for web-based interaction continue to expand.
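
To make the "global to local" trend a bit more concrete, here is a minimal sketch of the Getis-Ord Gi* local statistic in plain Python. This is not ESRI's implementation: the function name, the toy 5x5 grid, and the fixed distance band of 1.0 are all assumptions for illustration, and the optimized tool in Pro also chooses the distance band and corrects for multiple testing, neither of which is shown here.

```python
import math


def getis_ord_gi_star(points, values, band):
    """Return one Gi* z-score per point.

    Uses binary weights: 1 for every point within `band` of the
    target (the target itself included), 0 otherwise.
    """
    n = len(values)
    mean = sum(values) / n
    # Global standard deviation of the attribute values.
    s = math.sqrt(sum(v * v for v in values) / n - mean * mean)
    scores = []
    for xi, yi in points:
        w = [1.0 if math.hypot(xi - xj, yi - yj) <= band else 0.0
             for xj, yj in points]
        sum_w = sum(w)
        sum_w2 = sum(wj * wj for wj in w)
        # Local weighted sum compared with what the global mean predicts.
        numerator = sum(wj * v for wj, v in zip(w, values)) - mean * sum_w
        denominator = s * math.sqrt((n * sum_w2 - sum_w * sum_w) / (n - 1))
        # Undefined when values are constant or the band covers every point.
        scores.append(numerator / denominator if denominator > 0 else 0.0)
    return scores


# Hypothetical toy data: a 5x5 grid with a 2x2 cluster of high values
# in one corner and a low background everywhere else.
points = [(x, y) for y in range(5) for x in range(5)]
values = [10.0 if (x < 2 and y < 2) else 1.0 for x, y in points]
scores = getis_ord_gi_star(points, values, band=1.0)

for (x, y), z in zip(points, scores):
    label = "hot spot" if z > 1.96 else ""
    print(f"({x}, {y})  z = {z:6.2f}  {label}")
```

Each cell gets its own z-score, so the high-value corner stands out while the rest of the grid does not. A single global statistic would miss that. Changing `band` changes which cells are flagged, which is exactly the distance choice the optimized tools try to automate.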