Earlier this week we in ESPM heard a report from the folks in the SWIRL marketing team, who have been working to extract the essence of what we do in ESPM and in CNR. Their proposed tagline for us is "See the bigger picture. Make a better world," which aptly describes what we do in applied geospatial sciences. I kinda wish I'd thought it up myself. And since this summer marks the 40th anniversary of the Landsat program, I thought I'd use this post to talk about how our ability to observe the earth from space does indeed fit this new tagline.

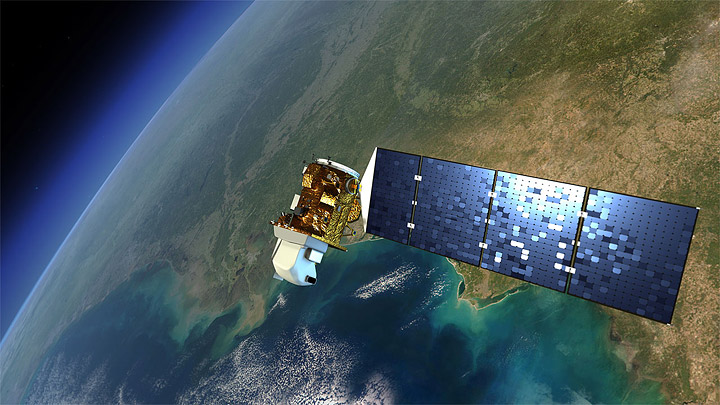

On July 23, 1972, the Earth Resources Technology Satellite (ERTS), later christened Landsat 1, was launched into a near-polar orbit. We had our first earth-watching, civilian science satellite. ERTS instruments recorded information in four spectral bands: red, green, and two infrared.

Remote sensing missions have continued through the decades that followed, making modern earth system science, landscape ecology, agriculture prediction, and many other fields possible. The Landsat missions have continued with some blips: Landsat 2 was launched in 1975, Landsat 3 in 1978, Landsat 4 in 1982, and Landsat 5 in 1984. In 1993, funds were found to keep Landsat 4 and 5 operational just before Landsat 6 failed upon launch and ended up in the Indian Ocean. Landsat 5 only recently gave out after 27 years of imaging; Landsat 7, launched in 1999, continues its work as well. The eighth satellite, dubbed the Landsat Data Continuity Mission (LDCM), is scheduled for launch in 2013. It will be the next chapter for the longest-operating Earth-observing program in the world. More information here: http://earthobservatory.nasa.gov.

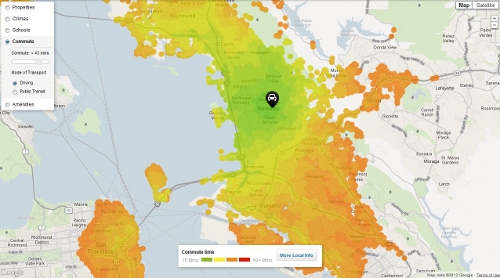

Landsat 7 is entirely government owned and operated, and after launch, the USGS was charged with distributing the data at government (nonprofit) rates. Today, the USGS distributes Landsat data over the Internet for free, and usage has exploded. Back in the day, we had to pay for each scene individually, which tended to limit the ability to work at regional, let alone global, scales. The new model of data distribution has made a number of online resources and visualizations possible. Additionally, there are currently a quarter of a million science citations that use Landsat imagery, focusing on agriculture, oceans, land change, and urban and natural areas.

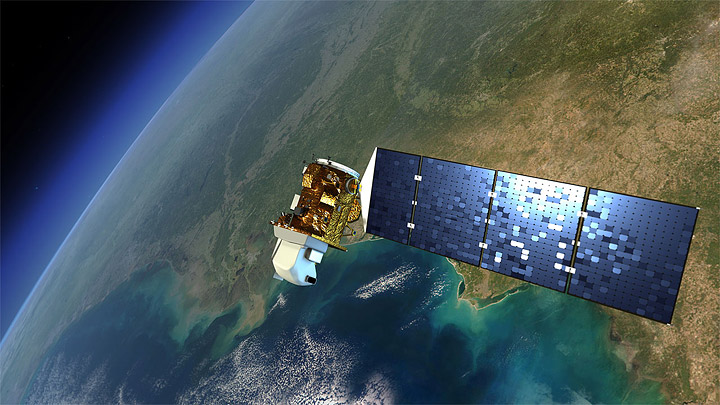

The first fully operational Landsat image, taken on July 25, 1972, inaugurating a 40-year run that began when the first satellite was known as the Earth Resources Technology Satellite, or ERTS. Credit: NASA’s Earth Observatory

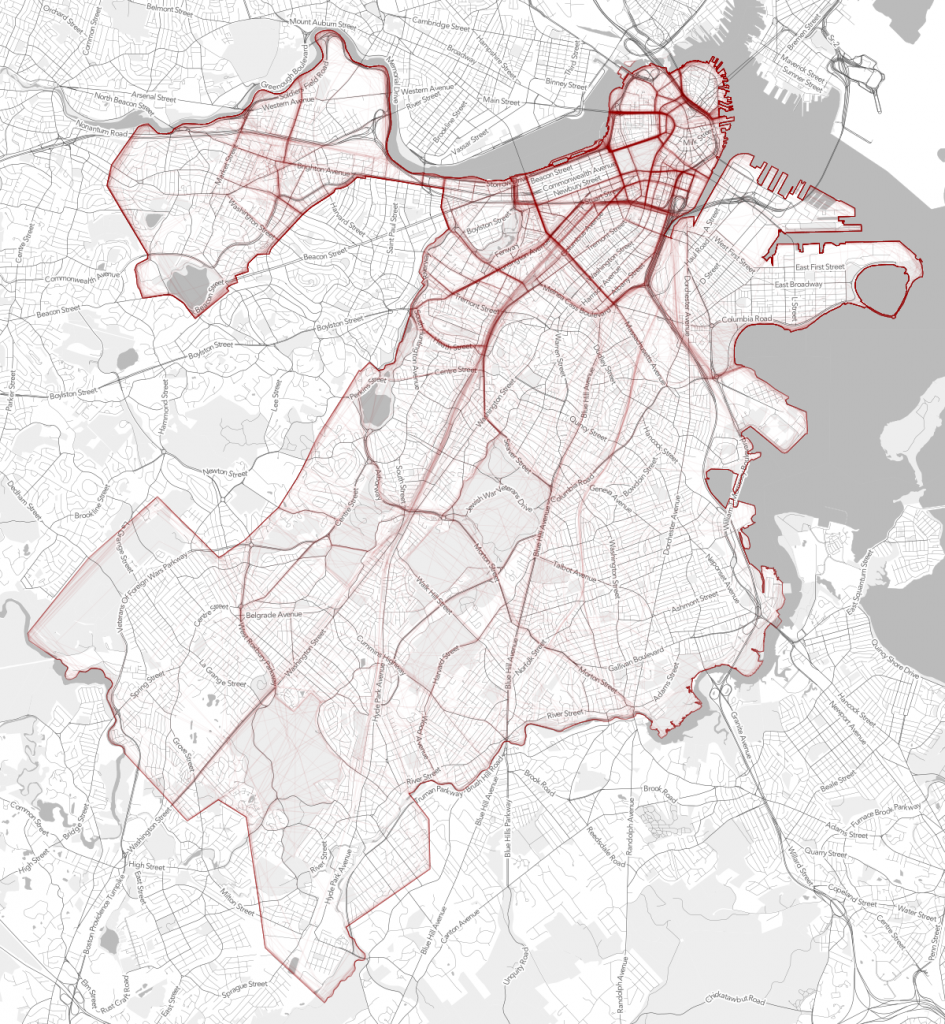

The image above was the first image from the Landsat program. It shows Dallas, TX. Check out those reservoirs!

Some nice write-ups about Landsat:

Landsat imagery:

Happy Fall Semester 2012!