Measuring impact in extension programming: case study of IGIS

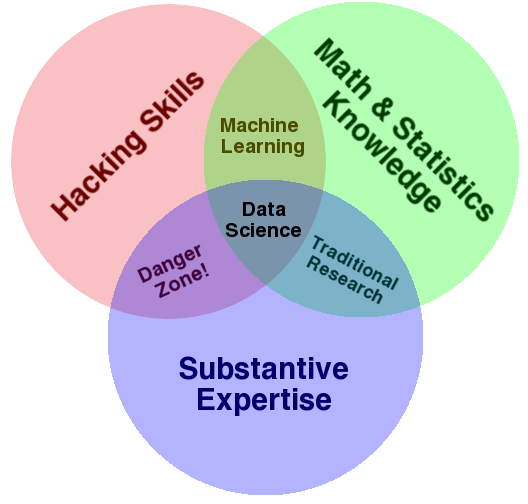

At UC Berkeley and at UC ANR, my outreach program involves the creation, integration, and application of research-based technical knowledge for the benefit of the public, policy-makers, and land managers. My work focuses on environmental management, vegetation change, vegetation monitoring, and climate change. Critical to my work is the ANR Statewide Program in Informatics and GIS (IGIS), which I began in 2012 and which is now really cranking with our crack team of IGIS people. We developed the IGIS program to provide research technology and data support for ANR’s mission objectives through the analysis and visualization of spatial data. We use state-of-the-art web, database, and mapping technology to provide acquisition, storage, and dissemination of large data sets critical to the ANR mission. We develop and deliver training on research technologies related to important agricultural and natural resource issues statewide. We facilitate networking and collaboration across ANR and UC on issues related to research technology and data. And we deliver research support through a service center for project-level work that has Division-wide application. Since I am off on sabbatical, I have decided to take some time to think about my outreach program and how to evaluate its impact.

There is a great literature about the history of extension since its 1914 beginnings, and specifically about how extension programs around the nation have been measuring impact. Extension has explored a variety of ways to measure the value of engagement for the public good (Franz 2011, 2014). Early attempts to measure performance focused on activity and reach: the number of individuals served and the quality of the interaction with those individuals. Over time, extension turned its attention to program outcomes. Recently, we’ve been focusing on articulating the Public Values of extension via Condition Change metrics (Rennekamp and Engle 2008). One popular evaluation method has been the Logic Model, which extension educators use to evaluate the effectiveness of a program by developing a clear workflow or plan that links program outcomes or impacts with outputs, activities, and inputs. We’ve developed a fair number of these models for the Sierra Nevada Adaptive Management Program (SNAMP), for example. Impacts include measures of change in learning, behavior, or condition across engagement efforts. Recently, change in policy became an additional measure of impact. I also think measuring reach is needed, and possible.

So, just to throw it out there, here is the master list of impact types that I try to use for measuring and evaluating my outreach program, and I’d be interested to hear what you all think of it.

- Change in reach: Geographic scope, Location of events, Number of users, etc.

- Change in activity: Usage, Engagement with a technology, New users, Sessions, Average session duration

- Change in learning: Participants have learned something new from delivered content

- Change in action, behavior, method: New efficiencies, Streamlined protocols, Adoption of new data, Adoption of best practices

- Change in policy: Evidence of contributions to local, state, or federal regulations

- Change in outcome: measured conditions have improved = condition change

I recently used this framework to help me think about the impact of the IGIS program, and I share some results here.

Measuring Reach. The IGIS program has developed and delivered workshops throughout California, through the leadership of Sean Hogan, Shane Feirer, and Andy Lyons (http://igis.ucanr.edu/IGISTraining). We manage and track all this activity through a custom data tracking dashboard that IGIS developed (using Google Sheets as a database linked to ArcGIS Online to render maps - very cool), and thus can provide key metrics about our reach. Together, we have delivered 52 workshops since July 2015 and reached nearly 800 people. These include workshops on GIS for Forestry, GIS for Agriculture, Drone Technology, WebGIS, Mobile Data Collection, and other topics. This is an impressive record of reach: we have delivered workshops from Humboldt to the Imperial Valley, and the attendees (n=766) have come from all over California. Check this map out:

Measuring Impact. At each workshop, we provide a feedback mechanism via an evaluation form and use this input to understand client satisfaction, reported changes in learning, and reported changes in participant workflow. We’ve been doing this for years, but I now think the questions we ask on those surveys need to change. Right now we are mostly capturing the client satisfaction part of the process, and we need to do a better job on the change in learning and change in action parts of the work.

Having done this exercise, I can clearly see that reach and activity are perhaps the easiest things to measure. We have information tools at our fingertips to do this: online web mapping of participant zip codes, and Google Analytics to track website activity. Measuring the other impacts (change in action, contributions to policy, and actual condition changes) is tough. I think extension will continue to struggle with these, but they are critical to help us articulate our value to the public. More work to do!
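To give a sense of why reach is the easy part, here is a minimal Python sketch of the kind of tally that an attendance log makes possible. It is only an illustration under stated assumptions: the records, the zip codes, and the `summarize_reach` helper are all made up for this example and are not the IGIS dashboard or its data.

```python
from collections import Counter

# Hypothetical attendance records: (workshop title, participant zip code).
# These rows are invented for illustration; they are not IGIS data.
attendance = [
    ("GIS for Forestry", "95501"),
    ("GIS for Forestry", "95521"),
    ("Drone Technology", "92243"),
    ("Drone Technology", "95501"),
    ("WebGIS", "94720"),
]


def summarize_reach(records):
    """Return simple reach metrics from (workshop, zip_code) pairs."""
    workshops = {workshop for workshop, _ in records}
    by_zip = Counter(zip_code for _, zip_code in records)
    return {
        "workshops": len(workshops),       # distinct workshops delivered
        "attendees": len(records),         # one record per attendee
        "zip_codes": len(by_zip),          # distinct zip codes reached
        "attendees_by_zip": dict(by_zip),  # counts that a web map could render
    }


summary = summarize_reach(attendance)
print(summary)
```

Counts like these fall straight out of a sign-in sheet. Nothing comparable exists for change in action, policy, or condition, which is why those need better survey questions and follow-up.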

References

Franz, Nancy K. 2011. “Advancing the Public Value Movement: Sustaining Extension During Tough Times.” Journal of Extension 49 (2): 2COM2.

———. 2014. “Measuring and Articulating the Value of Community Engagement: Lessons Learned from 100 Years of Cooperative Extension Work.” Journal of Higher Education Outreach and Engagement 18 (2): 5.

Rennekamp, Roger A., and Molly Engle. 2008. “A Case Study in Organizational Change: Evaluation in Cooperative Extension.” New Directions for Evaluation 2008 (120): 15–26.